Welcome to Apache CloudStack’s Documentation

We have a number of guides, starting with a guide to CloudStack’s terminology and concepts, followed by some information about possible topologies. We then have a quick start guide to help you get a very simple CloudStack up and running, followed by the full installation guide, an administrator’s guide, and further detailed guides on complex configurations.

Information can also be found on the CloudStack wiki at https://cwiki.apache.org/confluence/display/CLOUDSTACK/Home and on the CloudStack mailing lists at http://cloudstack.apache.org/mailing-lists.html

CloudStack Concepts and Terminology

This is the Apache CloudStack installation guide. In this guide, we first go through some design and architectural considerations to help you build your cloud.

Concepts and Terminology

What is Apache CloudStack?

Apache CloudStack is an open source Infrastructure-as-a-Service platform that manages and orchestrates pools of storage, network, and compute resources to build a public or private IaaS compute cloud.

With CloudStack you can:

- Set up an on-demand elastic cloud computing service.

- Allow end users to provision resources.

What can Apache CloudStack do?

Multiple Hypervisor Support

CloudStack works with a variety of hypervisors and hypervisor-like technologies. A single cloud can contain multiple hypervisor implementations. As of the current release, CloudStack supports:

- BareMetal (via IPMI)

- Hyper-V

- KVM

- LXC

- vSphere (via vCenter)

- XenServer

- Xen Project

Massively Scalable Infrastructure Management

CloudStack can manage tens of thousands of physical servers installed in geographically distributed datacenters. The management server scales near-linearly, eliminating the need for cluster-level management servers. Maintenance or other outages of the management server can occur without affecting the virtual machines running in the cloud.

Automatic Cloud Configuration Management

CloudStack automatically configures the network and storage settings for each virtual machine deployment. Internally, a pool of virtual appliances supports the configuration and operation of the cloud itself. These appliances offer services such as firewalling, routing, DHCP, VPN, console proxy, storage access, and storage replication. The extensive use of horizontally scalable virtual machines simplifies the installation and ongoing operation of a cloud.

Graphical User Interface

CloudStack offers a web interface for administrators, used for provisioning and managing the cloud, as well as a web interface for end users, used for running VMs and managing VM templates. The UI can be customized to reflect the desired service provider or enterprise look and feel.

API

CloudStack provides a REST-like API for the operation, management and use of the cloud.
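As an illustration of calling this API from a script, the sketch below signs a request using the request-signing scheme commonly documented for the CloudStack API: URL-encode each value, sort the parameters by field name, lowercase the resulting query string, compute an HMAC-SHA1 over it with the account's secret key, Base64-encode the digest, and append it as the `signature` parameter. The endpoint URL and key values are placeholders, and the helper names (`build_query`, `sign_request`, `signed_url`) are hypothetical; check the API reference for your release before relying on the details.

```python
import base64
import hashlib
import hmac
from urllib.parse import quote


def build_query(params: dict) -> str:
    """Join field=value pairs sorted by field name, URL-encoding each value."""
    # quote(..., safe="") encodes spaces as %20 (not '+') and escapes '/', '&', '='.
    items = sorted(params.items(), key=lambda kv: kv[0].lower())
    return "&".join(f"{key}={quote(str(value), safe='')}" for key, value in items)


def sign_request(params: dict, secret_key: str) -> str:
    """Return the Base64-encoded HMAC-SHA1 signature of the lowercased query string."""
    message = build_query(params).lower().encode("utf-8")
    digest = hmac.new(secret_key.encode("utf-8"), message, hashlib.sha1).digest()
    return base64.b64encode(digest).decode("ascii")


def signed_url(endpoint: str, params: dict, api_key: str, secret_key: str) -> str:
    """Build a request URL that carries the apiKey and a URL-encoded signature."""
    request = dict(params, apiKey=api_key, response="json")
    signature = quote(sign_request(request, secret_key), safe="")
    return f"{endpoint}?{build_query(request)}&signature={signature}"


if __name__ == "__main__":
    # Placeholder endpoint and credentials; substitute your own.
    print(signed_url("http://localhost:8080/client/api",
                     {"command": "listZones"},
                     api_key="YOUR_API_KEY",
                     secret_key="YOUR_SECRET_KEY"))
```

Port 8080 and the `/client/api` path are common defaults for a Management Server, but treat them as assumptions about your installation.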

AWS EC2 API Support

CloudStack provides an EC2 API translation layer so that common EC2 tools can be used with a CloudStack cloud.

High Availability

CloudStack has a number of features to increase the availability of the system. The Management Server itself may be deployed in a multi-node installation where the servers are load balanced. MySQL may be configured to use replication to provide for failover in the event of database loss. For the hosts, CloudStack supports NIC bonding and the use of separate networks for storage as well as iSCSI Multipath.

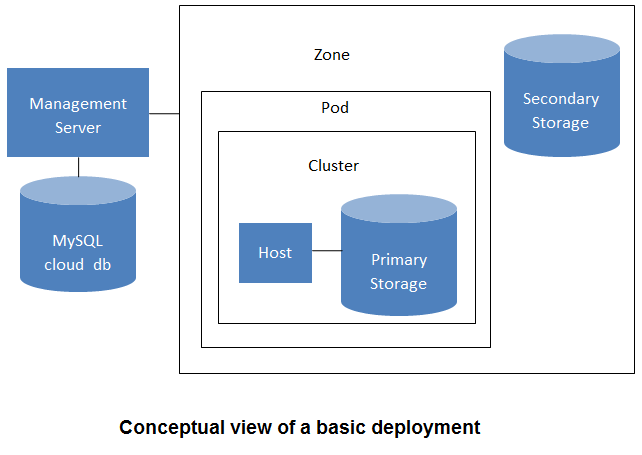

Deployment Architecture Overview

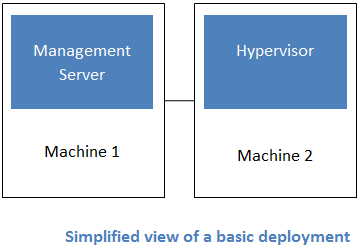

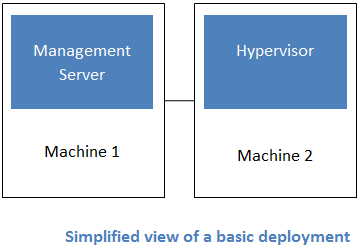

Generally speaking, most CloudStack deployments consist of the management server and the resources to be managed. During deployment you inform the management server of the resources to be managed, such as IP address blocks, storage devices, hypervisors, and VLANs.

The minimum installation consists of one machine running the CloudStack Management Server and another machine to act as the cloud infrastructure (in this case, a very simple infrastructure consisting of one host running hypervisor software). In its smallest deployment, a single machine can act as both the Management Server and the hypervisor host (using the KVM hypervisor).

A more full-featured installation consists of a highly-available multi-node Management Server installation and up to tens of thousands of hosts using any of several networking technologies.

Management Server Overview

The management server orchestrates and allocates the resources in your cloud deployment.

The management server typically runs on a dedicated machine or as a virtual machine. It controls allocation of virtual machines to hosts and assigns storage and IP addresses to the virtual machine instances. The Management Server runs in an Apache Tomcat container and requires a MySQL database for persistence.

The management server:

- Provides the web interface for both administrators and end users.

- Provides the API interfaces for both the CloudStack API and the EC2 interface.

- Manages the assignment of guest VMs to specific compute resources.

- Manages the assignment of public and private IP addresses.

- Allocates storage during the VM instantiation process.

- Manages snapshots, disk images (templates), and ISO images.

- Provides a single point of configuration for your cloud.

Cloud Infrastructure Overview

Resources within the cloud are managed as follows:

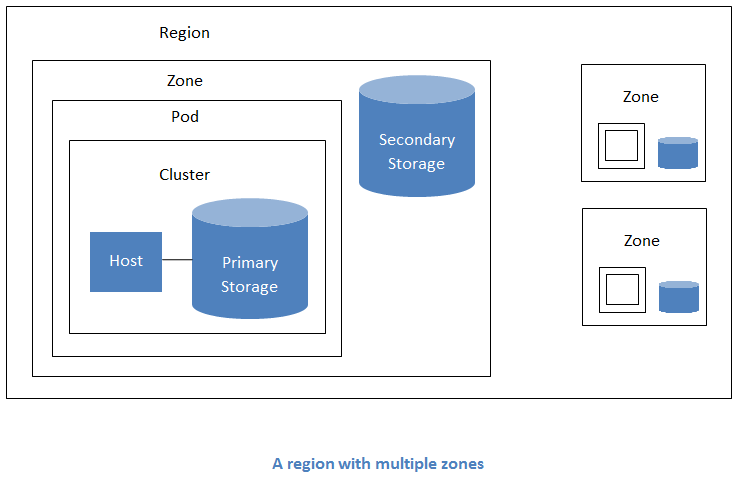

- Regions: A collection of one or more geographically proximate zones managed by one or more management servers.

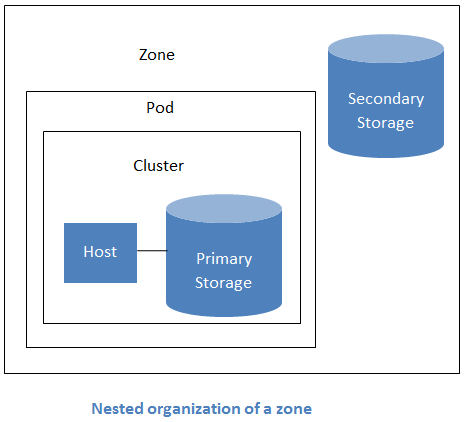

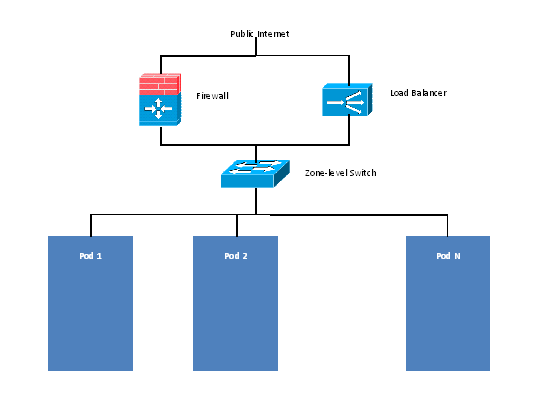

- Zones: Typically, a zone is equivalent to a single datacenter. A zone consists of one or more pods and secondary storage.

- Pods: A pod is usually a rack or row of racks that includes a layer-2 switch and one or more clusters.

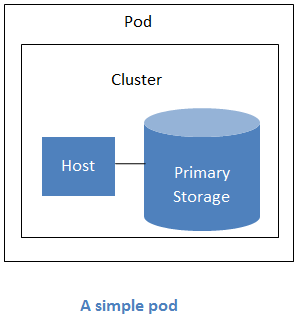

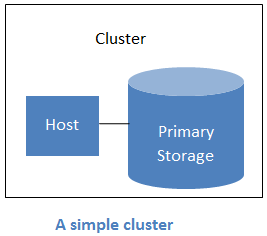

- Clusters: A cluster consists of one or more homogeneous hosts and primary storage.

- Host: A single compute node within a cluster; often a hypervisor.

- Primary Storage: A storage resource typically provided to a single cluster for the actual running of instance disk images. (Zone-wide primary storage is an option, though not typically used.)

- Secondary Storage: A zone-wide resource which stores disk templates, ISO images, and snapshots.

Networking Overview

CloudStack offers many types of networking, but they typically fall into one of two scenarios:

- Basic: Most analogous to AWS-classic style networking. Provides a single flat layer-2 network where guest isolation is provided at layer-3 by the hypervisor’s bridge device.

- Advanced: This typically uses layer-2 isolation such as VLANs, though this category also includes SDN technologies such as Nicira NVP.

CloudStack Terminology

About Regions

To increase reliability of the cloud, you can optionally group resources into multiple geographic regions. A region is the largest available organizational unit within a CloudStack deployment. A region is made up of several availability zones, where each zone is roughly equivalent to a datacenter. Each region is controlled by its own cluster of Management Servers, running in one of the zones. The zones in a region are typically located in close geographical proximity. Regions are a useful technique for providing fault tolerance and disaster recovery.

By grouping zones into regions, the cloud can achieve higher availability and scalability. User accounts can span regions, so that users can deploy VMs in multiple, widely-dispersed regions. Even if one of the regions becomes unavailable, the services are still available to the end-user through VMs deployed in another region. And by grouping communities of zones under their own nearby Management Servers, the latency of communications within the cloud is reduced compared to managing widely-dispersed zones from a single central Management Server.

Usage records can also be consolidated and tracked at the region level, creating reports or invoices for each geographic region.

Regions are visible to the end user. When a user starts a guest VM on a particular CloudStack Management Server, the user is implicitly selecting that region for their guest. Users might also be required to copy their private templates to additional regions to enable creation of guest VMs using their templates in those regions.

About Zones

A zone is the second largest organizational unit within a CloudStack deployment. A zone typically corresponds to a single datacenter, although it is permissible to have multiple zones in a datacenter. The benefit of organizing infrastructure into zones is to provide physical isolation and redundancy. For example, each zone can have its own power supply and network uplink, and the zones can be widely separated geographically (though this is not required).

A zone consists of:

- One or more pods. Each pod contains one or more clusters of hosts and one or more primary storage servers.

- A zone may contain one or more primary storage servers, which are shared by all the pods in the zone.

- Secondary storage, which is shared by all the pods in the zone.

Zones are visible to the end user. When a user starts a guest VM, the user must select a zone for their guest. Users might also be required to copy their private templates to additional zones to enable creation of guest VMs using their templates in those zones.

Zones can be public or private. Public zones are visible to all users. This means that any user may create a guest in that zone. Private zones are reserved for a specific domain. Only users in that domain or its subdomains may create guests in that zone.
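The public/private rule above amounts to a small access check. The sketch below assumes, for illustration only, that domains are written as slash-separated paths such as `ROOT/sales/emea` and that a public zone is represented by `None`; the function name `can_create_guest` is hypothetical and is not part of the CloudStack API.

```python
from typing import Optional


def can_create_guest(zone_domain: Optional[str], user_domain: str) -> bool:
    """Return True if a user in user_domain may create a guest in the zone.

    zone_domain is None for a public zone, or the path of the domain that a
    private zone is reserved for (path format assumed for illustration).
    """
    if zone_domain is None:
        return True  # public zone: any user may create a guest
    # Private zone: only the dedicated domain itself or one of its subdomains.
    return user_domain == zone_domain or user_domain.startswith(zone_domain + "/")


if __name__ == "__main__":
    print(can_create_guest(None, "ROOT/eng"))                 # True: public zone
    print(can_create_guest("ROOT/sales", "ROOT/sales/emea"))  # True: subdomain
    print(can_create_guest("ROOT/sales", "ROOT/salesforce"))  # False: not a subdomain
```

Comparing against `zone_domain + "/"` rather than a bare prefix keeps a sibling such as `ROOT/salesforce` from matching a zone reserved for `ROOT/sales`.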

Hosts in the same zone are directly accessible to each other without having to go through a firewall. Hosts in different zones can access each other through statically configured VPN tunnels.

For each zone, the administrator must decide the following:

- How many pods to place in each zone.

- How many clusters to place in each pod.

- How many hosts to place in each cluster.

- (Optional) How many primary storage servers to place in each zone and total capacity for these storage servers.

- How many primary storage servers to place in each cluster and total capacity for these storage servers.

- How much secondary storage to deploy in a zone.

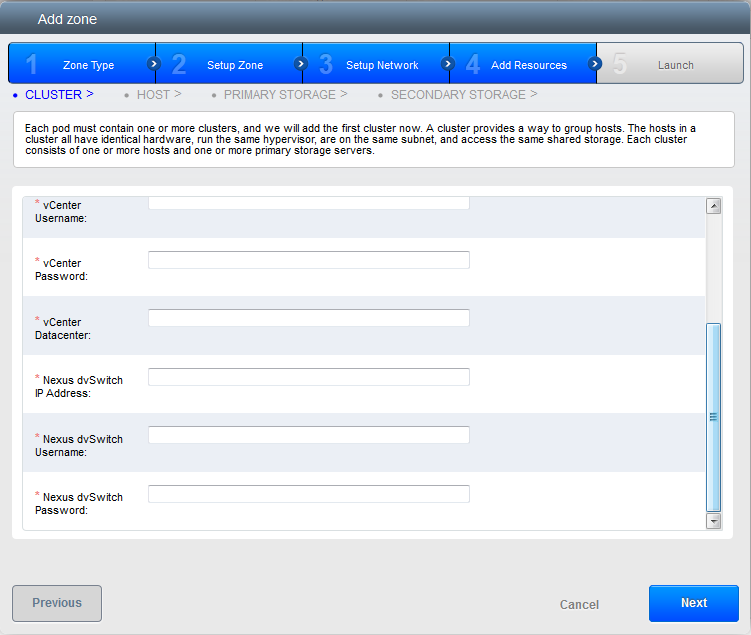

When you add a new zone using the CloudStack UI, you will be prompted to configure the zone’s physical network and add the first pod, cluster, host, primary storage, and secondary storage.

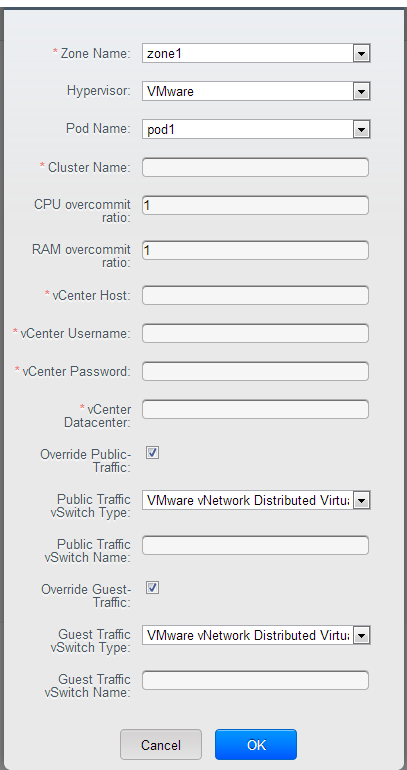

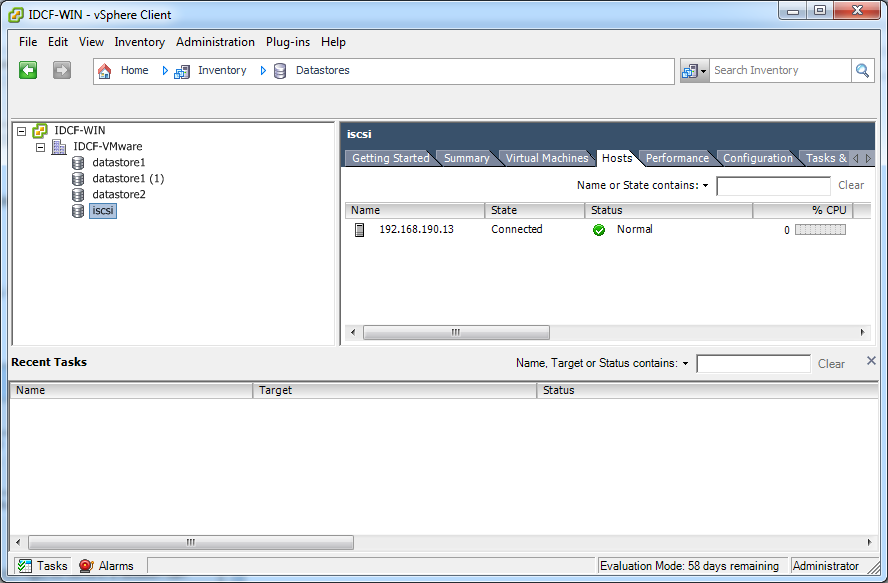

In order to support zone-wide functions for VMware, CloudStack is aware of VMware Datacenters and can map each Datacenter to a CloudStack zone. To enable features like storage live migration and zone-wide primary storage for VMware hosts, CloudStack has to make sure that a zone contains only a single VMware Datacenter. Therefore, when you are creating a new CloudStack zone, you can select a VMware Datacenter for the zone. If you are provisioning multiple VMware Datacenters, each one will be set up as a single zone in CloudStack.

Note

If you are upgrading from a previous CloudStack version, and your existing deployment contains a zone with clusters from multiple VMware Datacenters, that zone will not be forcibly migrated to the new model. It will continue to function as before. However, any new zone-wide operations, such as zone-wide primary storage and live storage migration, will not be available in that zone.

About Pods

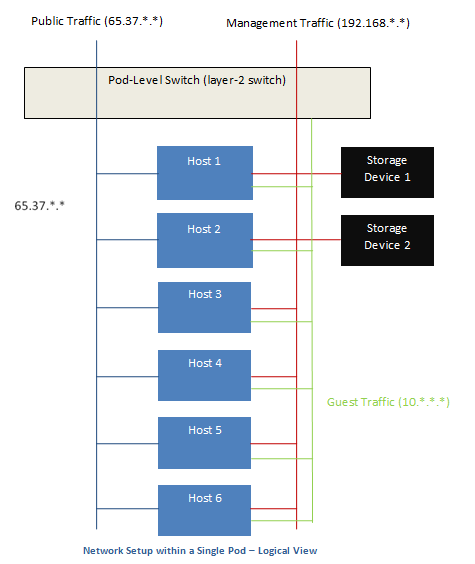

A pod often represents a single rack. Hosts in the same pod are in the same subnet. A pod is the third-largest organizational unit within a CloudStack deployment. Pods are contained within zones. Each zone can contain one or more pods. A pod consists of one or more clusters of hosts and one or more primary storage servers. Pods are not visible to the end user.

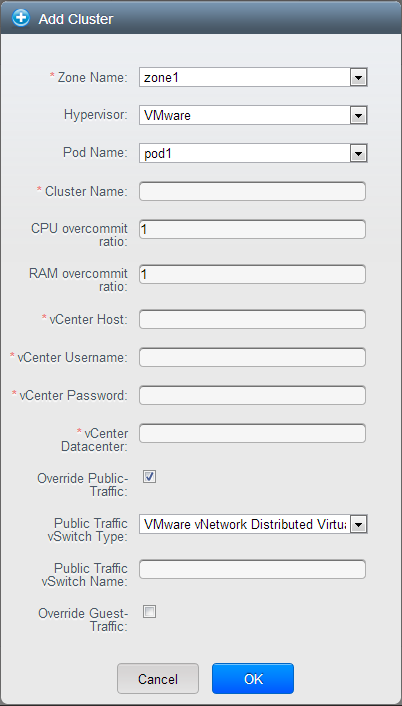

About Clusters

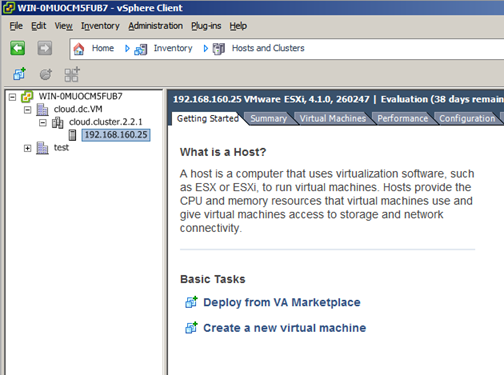

A cluster provides a way to group hosts. To be precise, a cluster is a XenServer server pool, a set of KVM servers, or a VMware cluster preconfigured in vCenter. The hosts in a cluster all have identical hardware, run the same hypervisor, are on the same subnet, and access the same shared primary storage. Virtual machine instances (VMs) can be live-migrated from one host to another within the same cluster, without interrupting service to the user.

A cluster is the fourth-largest organizational unit within a CloudStack deployment. Clusters are contained within pods, and pods are contained within zones. The size of a cluster is limited by the underlying hypervisor, although CloudStack recommends smaller clusters in most cases; see Best Practices.

A cluster consists of one or more hosts and one or more primary storage servers.

CloudStack allows multiple clusters in a cloud deployment.

Clusters are still required organizationally when local storage is used exclusively, even if each cluster contains just one host.

When VMware is used, every VMware cluster is managed by a vCenter server. An Administrator must register the vCenter server with CloudStack. There may be multiple vCenter servers per zone. Each vCenter server may manage multiple VMware clusters.

About Hosts

A host is a single computer. Hosts provide the computing resources that run guest virtual machines. Each host has hypervisor software installed on it to manage the guest VMs. For example, a host can be a Citrix XenServer server, a Linux KVM-enabled server, an ESXi server, or a Windows Hyper-V server.

The host is the smallest organizational unit within a CloudStack deployment. Hosts are contained within clusters, clusters are contained within pods, pods are contained within zones, and zones can be contained within regions.
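The containment hierarchy described above can be modelled as nested records. The sketch below is purely illustrative: the class names are hypothetical and do not correspond to CloudStack's internal classes or API objects. It also enforces the rule, stated under About Clusters, that every host in a cluster runs the same hypervisor.

```python
from dataclasses import dataclass, field
from typing import Iterator, List


@dataclass
class Host:
    name: str
    hypervisor: str  # e.g. "KVM", "XenServer", "VMware", "Hyper-V"


@dataclass
class Cluster:
    name: str
    hosts: List[Host] = field(default_factory=list)

    def add_host(self, host: Host) -> None:
        # All hosts in a cluster must run the same hypervisor.
        if self.hosts and host.hypervisor != self.hosts[0].hypervisor:
            raise ValueError(f"cluster {self.name} runs {self.hosts[0].hypervisor}, "
                             f"not {host.hypervisor}")
        self.hosts.append(host)


@dataclass
class Pod:
    name: str
    clusters: List[Cluster] = field(default_factory=list)


@dataclass
class Zone:
    name: str
    pods: List[Pod] = field(default_factory=list)


@dataclass
class Region:
    name: str
    zones: List[Zone] = field(default_factory=list)

    def hosts(self) -> Iterator[Host]:
        """Walk region -> zone -> pod -> cluster -> host."""
        for zone in self.zones:
            for pod in zone.pods:
                for cluster in pod.clusters:
                    yield from cluster.hosts


if __name__ == "__main__":
    cluster = Cluster("cluster-1")
    cluster.add_host(Host("kvm-01", "KVM"))
    cluster.add_host(Host("kvm-02", "KVM"))
    region = Region("region-1", [Zone("zone-1", [Pod("pod-1", [cluster])])])
    print([h.name for h in region.hosts()])  # ['kvm-01', 'kvm-02']
```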

Hosts in a CloudStack deployment:

- Provide the CPU, memory, storage, and networking resources needed to host the virtual machines

- Interconnect using a high bandwidth TCP/IP network and connect to the Internet

- May reside in multiple data centers across different geographic locations

- May have different capacities (different CPU speeds, different amounts of RAM, etc.), although the hosts within a cluster must all be homogeneous

Additional hosts can be added at any time to provide more capacity for guest VMs.

CloudStack automatically detects the amount of CPU and memory resources provided by the hosts.

Hosts are not visible to the end user. An end user cannot determine which host their guest has been assigned to.

For a host to function in CloudStack, you must do the following:

- Install hypervisor software on the host

- Assign an IP address to the host

- Ensure the host is connected to the CloudStack Management Server.

About Primary Storage

Primary storage is associated with a cluster, and it stores virtual disks for all the VMs running on hosts in that cluster. On KVM and VMware, you can provision primary storage on a per-zone basis.

You can add multiple primary storage servers to a cluster or zone. At least one is required. It is typically located close to the hosts for increased performance. CloudStack manages the allocation of guest virtual disks to particular primary storage devices.

It is useful to set up zone-wide primary storage when you want to avoid extra data copy operations. With cluster-based primary storage, data in the primary storage is directly available only to VMs within that cluster. If a VM in a different cluster needs some of the data, it must be copied from one cluster to another, using the zone’s secondary storage as an intermediate step. This operation can be unnecessarily time-consuming.

For Hyper-V, SMB/CIFS storage is supported. Note that Zone-wide Primary Storage is not supported in Hyper-V.

Ceph/RBD storage is only supported by the KVM hypervisor. It can be used as Zone-wide Primary Storage.

CloudStack is designed to work with all standards-compliant iSCSI and NFS servers that are supported by the underlying hypervisor, including, for example:

- SolidFire for iSCSI

- Dell EqualLogic™ for iSCSI

- Network Appliances filers for NFS and iSCSI

- Scale Computing for NFS

If you intend to use only local disk for your installation, you can skip adding separate primary storage.

About Secondary Storage

Secondary storage stores the following:

- Templates — OS images that can be used to boot VMs and can include additional configuration information, such as installed applications

- ISO images — disc images containing data or bootable media for operating systems

- Disk volume snapshots — saved copies of VM data which can be used for data recovery or to create new templates

The items in secondary storage are available to all hosts in the scope of the secondary storage, which may be defined per zone or per region.

To make items in secondary storage available to all hosts throughout the cloud, you can add object storage in addition to the zone-based NFS Secondary Staging Store. It is not necessary to copy templates and snapshots from one zone to another, as would be required when using zone NFS alone. Everything is available everywhere.

For Hyper-V hosts, SMB/CIFS storage is supported.

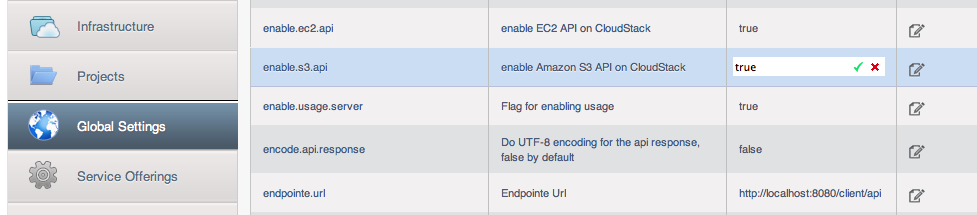

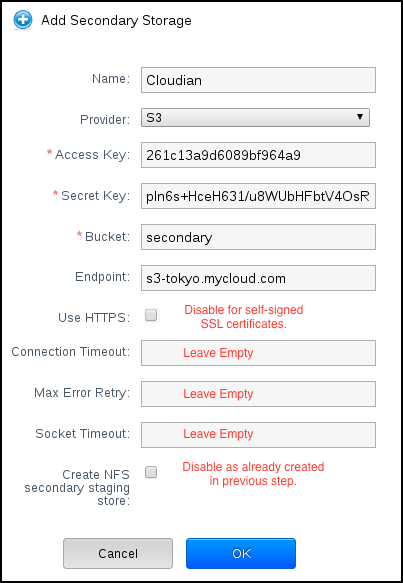

CloudStack provides plugins that enable both OpenStack Object Storage (Swift, swift.openstack.org) and Amazon Simple Storage Service (S3) object storage. When using one of these storage plugins, you configure Swift or S3 storage for the entire CloudStack, then set up the NFS Secondary Staging Store for each zone. The NFS storage in each zone acts as a staging area through which all templates and other secondary storage data pass before being forwarded to Swift or S3. The backing object storage acts as a cloud-wide resource, making templates and other data available to any zone in the cloud.

Warning

Heterogeneous Secondary Storage is not supported in Regions. For example, you cannot set up multiple zones, one using NFS secondary and the other using S3 or Swift secondary.

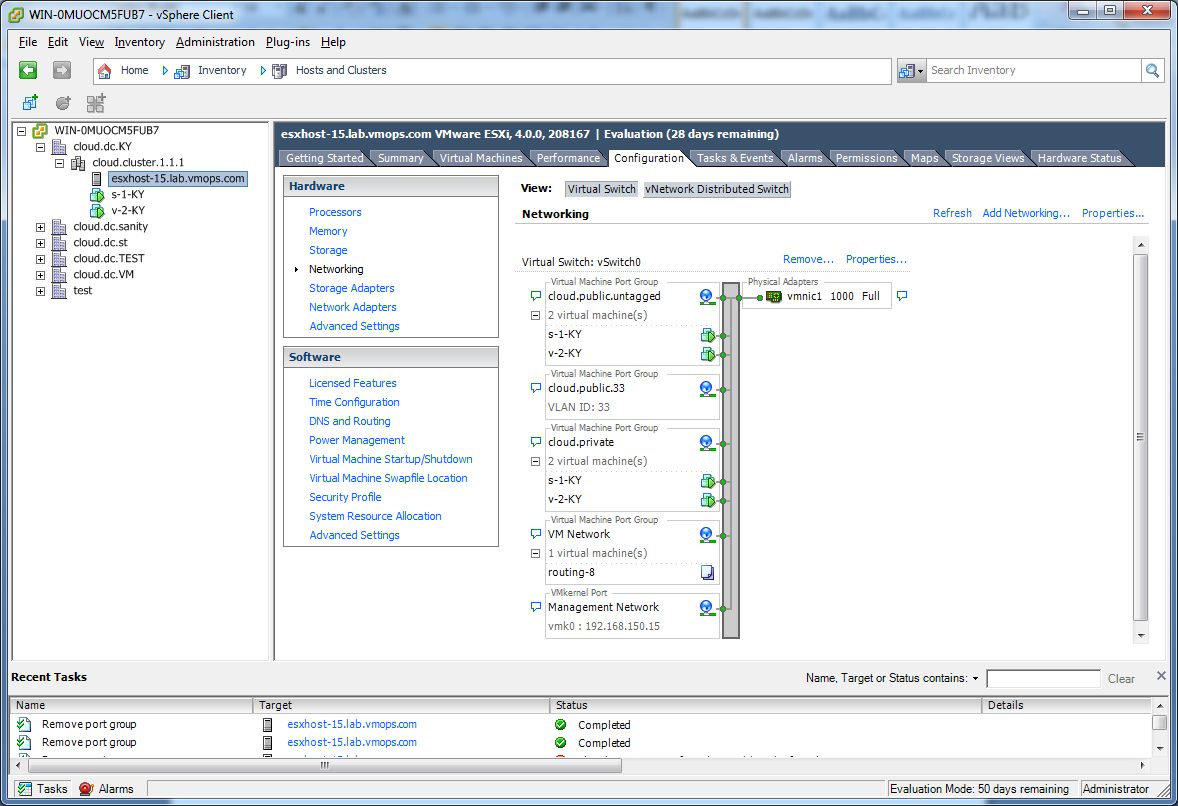

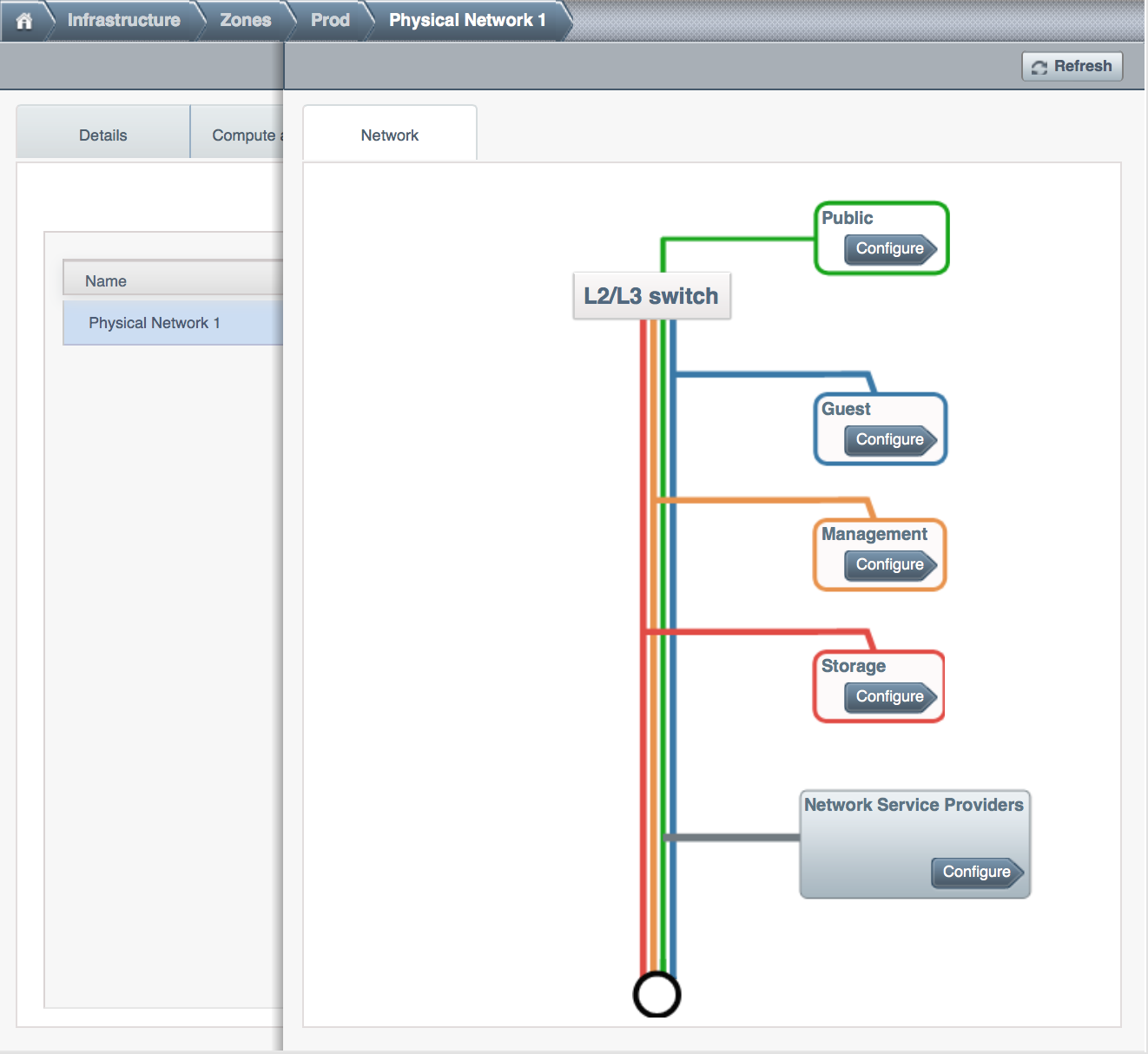

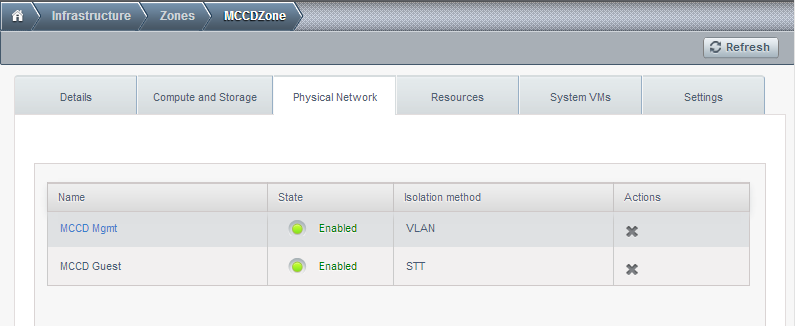

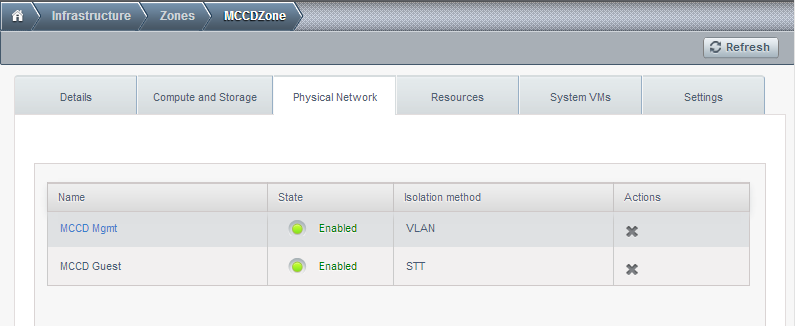

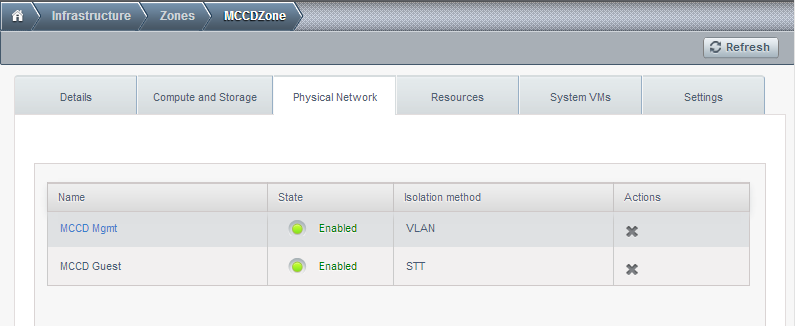

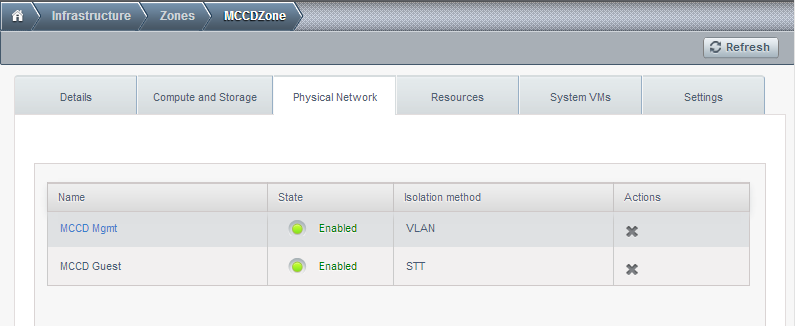

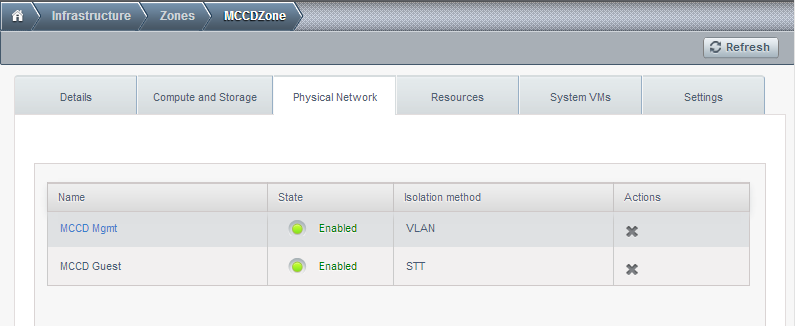

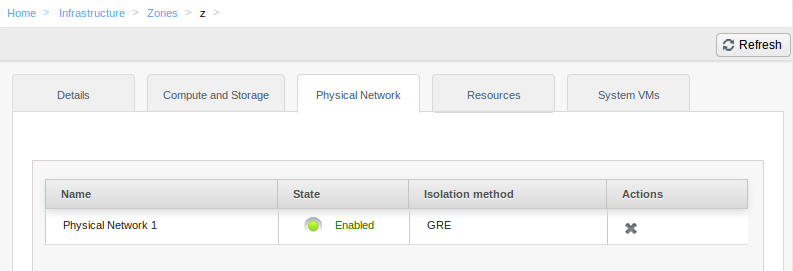

About Physical Networks

Part of adding a zone is setting up the physical network. One physical network, or in an advanced zone more than one, can be associated with each zone. The network corresponds to a NIC on the hypervisor host. Each physical network can carry one or more types of network traffic. The choices of traffic type for each network vary depending on whether you are creating a zone with basic networking or advanced networking.

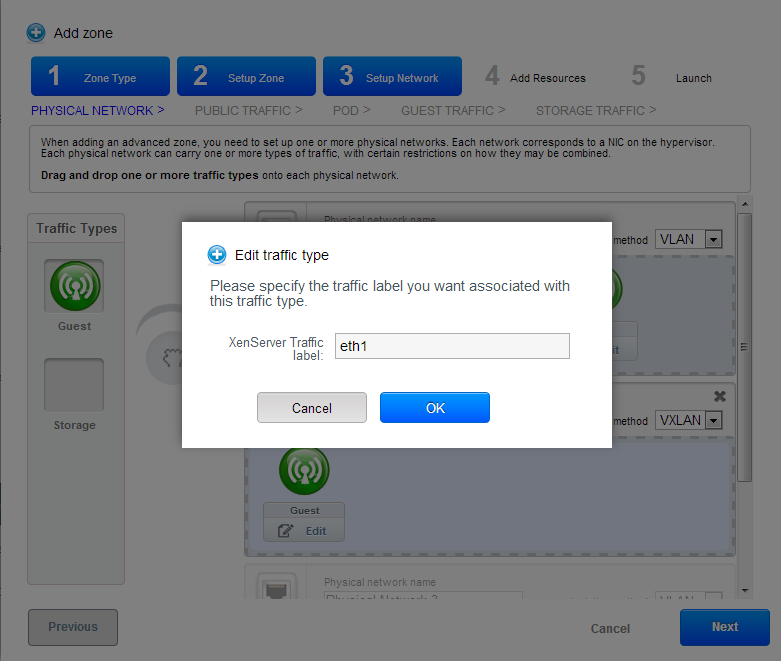

A physical network is the actual network hardware and wiring in a zone. A zone can have multiple physical networks. An administrator can:

- Add/Remove/Update physical networks in a zone

- Configure VLANs on the physical network

- Configure a name so the network can be recognized by hypervisors

- Configure the service providers (firewalls, load balancers, etc.) available on a physical network

- Configure the IP addresses trunked to a physical network

- Specify what type of traffic is carried on the physical network, as well as other properties like network speed

Basic Zone Network Traffic Types

When basic networking is used, there can be only one physical network in the zone. That physical network carries the following traffic types:

- Guest. When end users run VMs, they generate guest traffic. The guest VMs communicate with each other over a network that can be referred to as the guest network. Each pod in a basic zone is a broadcast domain, and therefore each pod has a different IP range for the guest network. The administrator must configure the IP range for each pod.

- Management. When CloudStack’s internal resources communicate with each other, they generate management traffic. This includes communication between hosts, system VMs (VMs used by CloudStack to perform various tasks in the cloud), and any other component that communicates directly with the CloudStack Management Server. You must configure the IP range for the system VMs to use.

Note

We strongly recommend the use of separate NICs for management traffic and guest traffic.

- Public. Public traffic is generated when VMs in the cloud access the Internet. Publicly accessible IPs must be allocated for this purpose. End users can use the CloudStack UI to acquire these IPs to implement NAT between their guest network and the public network, as described in Acquiring a New IP Address.

- Storage. Although labeled “storage,” this traffic type is specifically about secondary storage and doesn’t affect traffic for primary storage. This includes traffic such as VM templates and snapshots, which is sent between the secondary storage VM and secondary storage servers. CloudStack uses a separate Network Interface Controller (NIC) named storage NIC for storage network traffic. Use of a storage NIC that always operates on a high bandwidth network allows fast template and snapshot copying. You must configure the IP range to use for the storage network.

In a basic network, configuring the physical network is fairly straightforward. In most cases, you only need to configure one guest network to carry traffic that is generated by guest VMs. If you use a NetScaler load balancer and enable its elastic IP and elastic load balancing (EIP and ELB) features, you must also configure a network to carry public traffic. CloudStack takes care of presenting the necessary network configuration steps to you in the UI when you add a new zone.

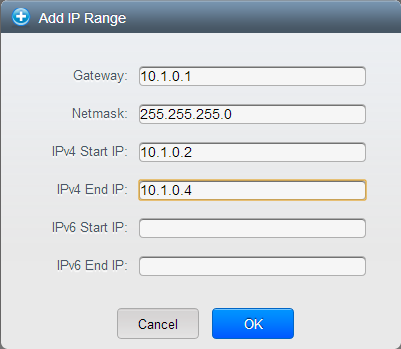

Basic Zone Guest IP Addresses

When basic networking is used, CloudStack will assign IP addresses in the CIDR of the pod to the guests in that pod. The administrator must add a Direct IP range on the pod for this purpose. These IPs are in the same VLAN as the hosts.
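Because each pod in a basic zone is its own broadcast domain with its own guest range, a planning script can check that every Direct IP range sits inside its pod's CIDR and that no two ranges overlap. This is a hypothetical pre-deployment check written with Python's standard `ipaddress` module, not a CloudStack tool; the input format is an assumption.

```python
import ipaddress
from typing import Dict, List, Tuple


def pod_range_problems(pods: Dict[str, Tuple[str, str, str]]) -> List[str]:
    """Check basic-zone guest IP ranges.

    pods maps a pod name to (pod CIDR, first guest IP, last guest IP).
    Returns human-readable problems; an empty list means the plan is consistent.
    """
    problems = []
    accepted = []  # (pod name, first, last) for ranges that passed the CIDR check
    for name, (cidr, start, end) in pods.items():
        net = ipaddress.ip_network(cidr)
        first, last = ipaddress.ip_address(start), ipaddress.ip_address(end)
        # The guest range must be ordered and lie inside the pod's own CIDR.
        if first > last or first not in net or last not in net:
            problems.append(f"{name}: {start}-{end} is not a valid range inside {cidr}")
            continue
        # Each pod gets a different guest range, so ranges must not overlap.
        for other, o_first, o_last in accepted:
            if first <= o_last and o_first <= last:
                problems.append(f"{name}: {start}-{end} overlaps the range of {other}")
        accepted.append((name, first, last))
    return problems


if __name__ == "__main__":
    plan = {
        "pod-1": ("10.1.1.0/24", "10.1.1.100", "10.1.1.200"),
        "pod-2": ("10.1.2.0/24", "10.1.2.100", "10.1.3.10"),  # end is outside pod-2's CIDR
    }
    for problem in pod_range_problems(plan):
        print(problem)
```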

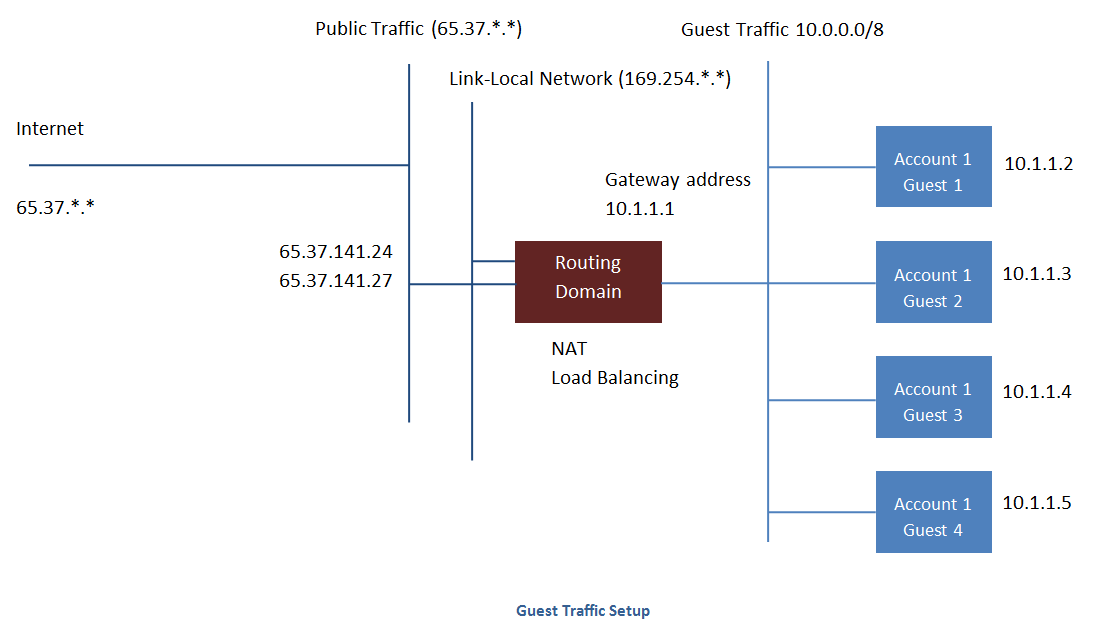

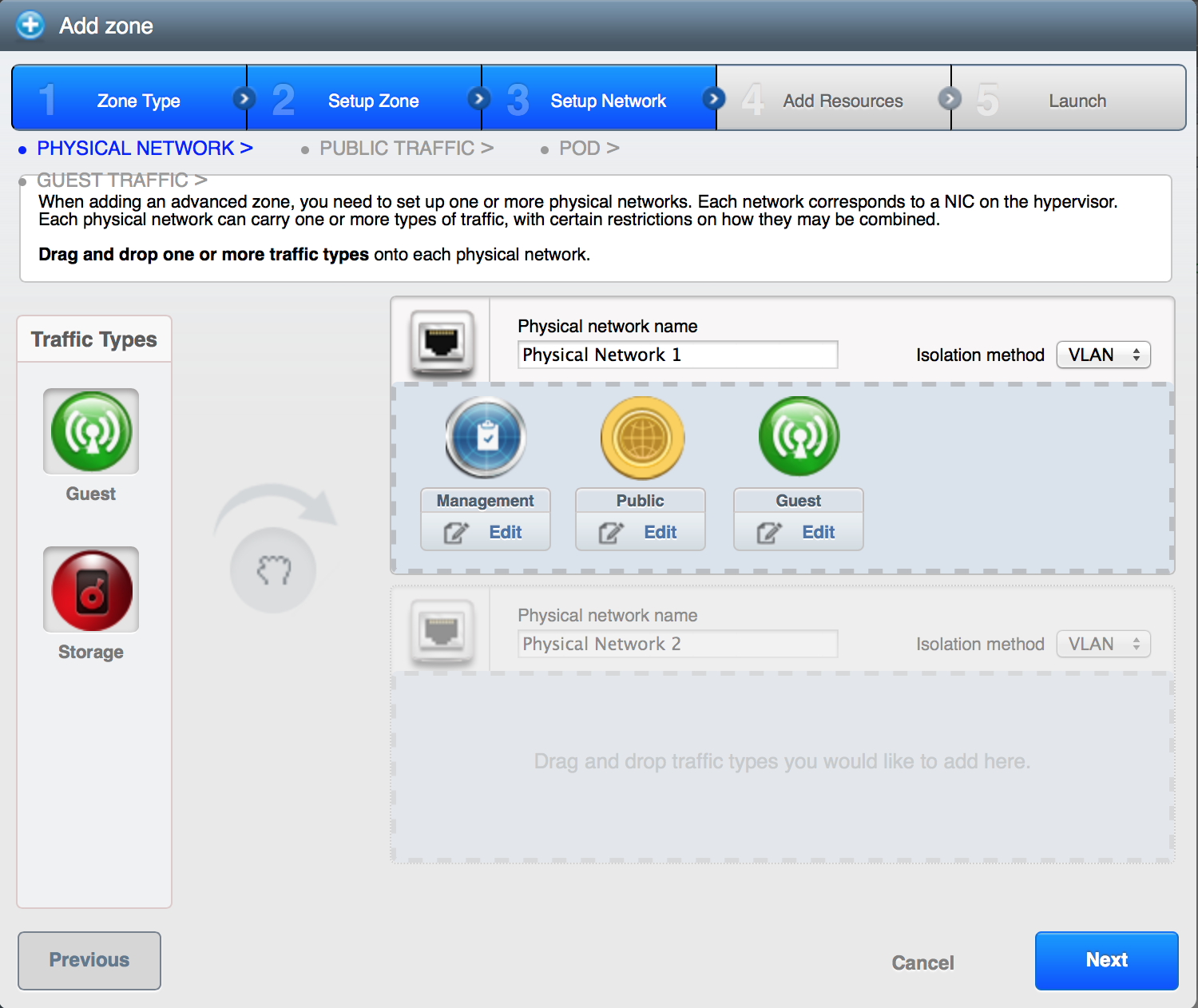

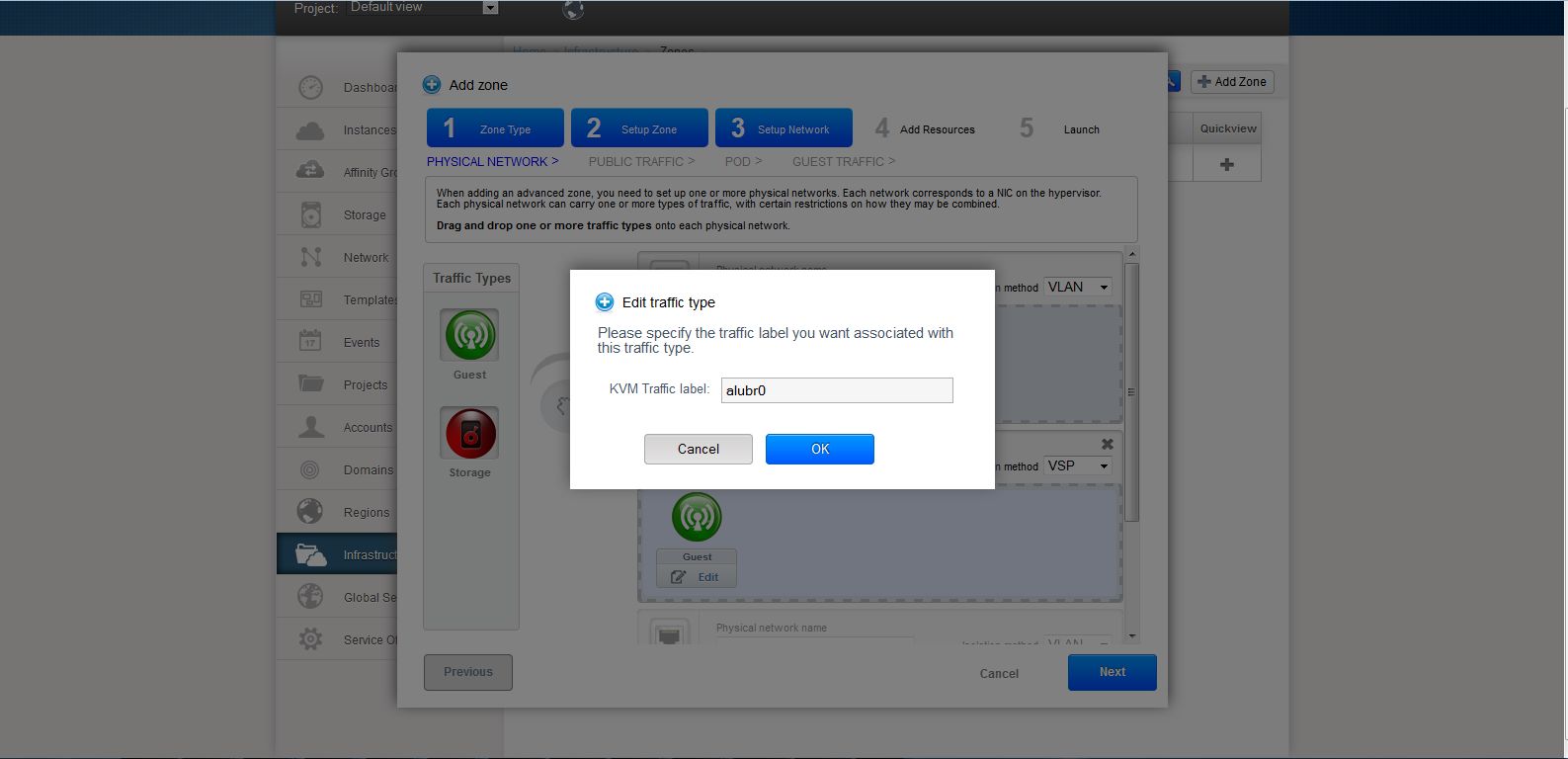

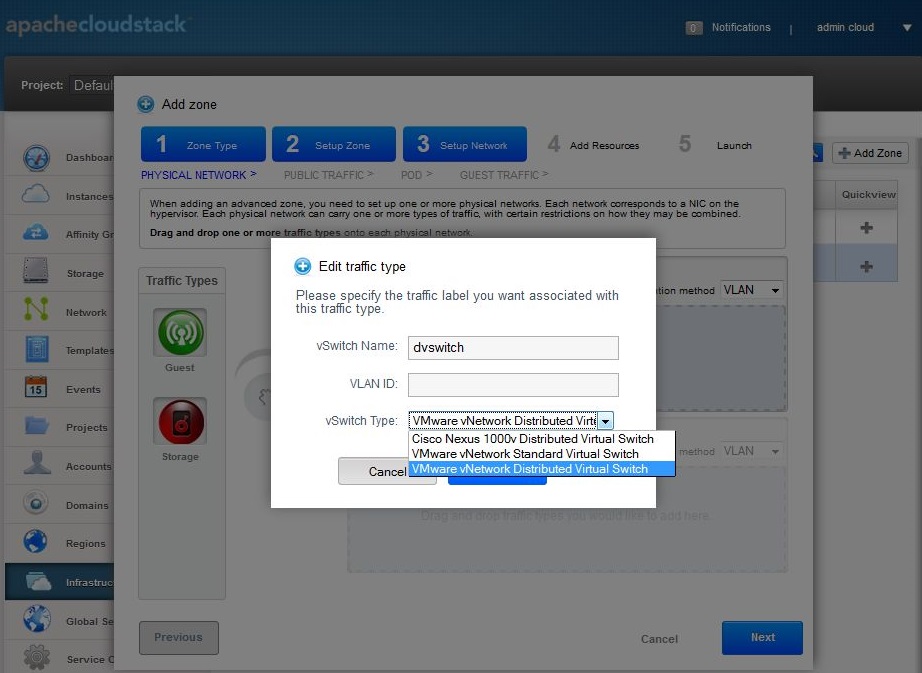

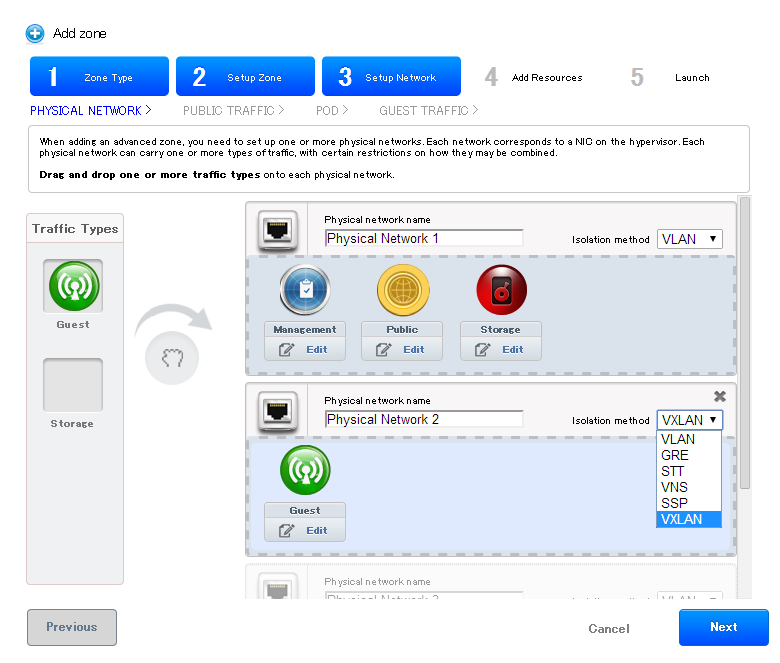

Advanced Zone Network Traffic Types

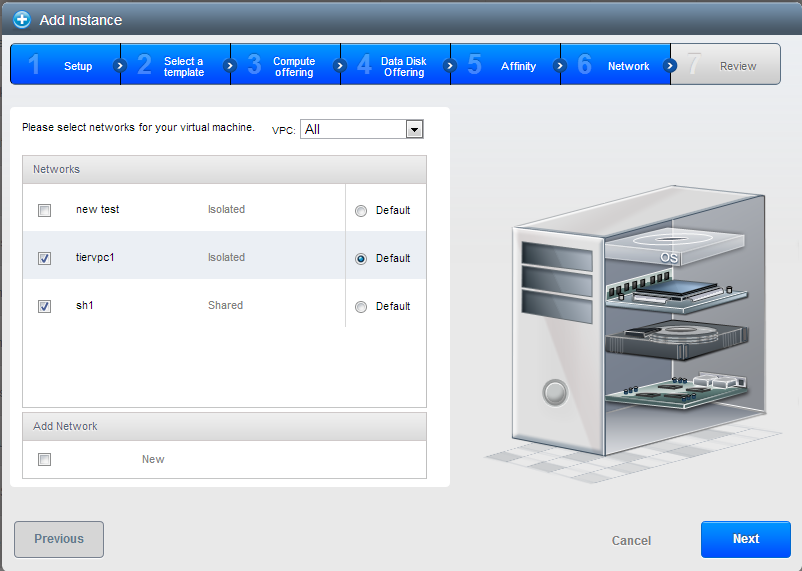

When advanced networking is used, there can be multiple physical networks in the zone. Each physical network can carry one or more traffic types, and you need to let CloudStack know which type of network traffic you want each network to carry. The traffic types in an advanced zone are:

- Guest. When end users run VMs, they generate guest traffic. The guest VMs communicate with each other over a network that can be referred to as the guest network. This network can be isolated or shared. In an isolated guest network, the administrator needs to reserve VLAN ranges to provide isolation for each CloudStack account’s network (potentially a large number of VLANs). In a shared guest network, all guest VMs share a single network.

- Management. When CloudStack’s internal resources communicate with each other, they generate management traffic. This includes communication between hosts, system VMs (VMs used by CloudStack to perform various tasks in the cloud), and any other component that communicates directly with the CloudStack Management Server. You must configure the IP range for the system VMs to use.

- Public. Public traffic is generated when VMs in the cloud access the Internet. Publicly accessible IPs must be allocated for this purpose. End users can use the CloudStack UI to acquire these IPs to implement NAT between their guest network and the public network, as described in “Acquiring a New IP Address” in the Administration Guide.

- Storage. Although labeled “storage,” this traffic type is specifically about secondary storage and doesn’t affect traffic for primary storage. This includes traffic such as VM templates and snapshots, which is sent between the secondary storage VM and secondary storage servers. CloudStack uses a separate Network Interface Controller (NIC) named storage NIC for storage network traffic. Use of a storage NIC that always operates on a high bandwidth network allows fast template and snapshot copying. You must configure the IP range to use for the storage network.
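The Guest item above notes that isolated guest networks consume VLANs from a range the administrator reserves. The sketch below illustrates why that range needs to be sized generously: each isolated network holds one VLAN ID until the range runs out. It is a hypothetical model, not CloudStack's allocator; it assumes only the IEEE 802.1Q rule that VLAN IDs 1 to 4094 are usable.

```python
from typing import Dict, List


class VlanAllocator:
    """Hypothetical model: one VLAN ID per isolated guest network from a reserved range."""

    def __init__(self, first: int, last: int) -> None:
        # 802.1Q VLAN IDs 1-4094 are usable; 0 and 4095 are reserved by the standard.
        if not 1 <= first <= last <= 4094:
            raise ValueError("VLAN range must lie within 1-4094")
        self._free: List[int] = list(range(first, last + 1))
        self._assigned: Dict[str, int] = {}

    def vlan_for(self, network: str) -> int:
        """Return the network's VLAN ID, assigning the next free one on first use."""
        if network not in self._assigned:
            if not self._free:
                raise RuntimeError("reserved VLAN range exhausted; reserve a larger range")
            self._assigned[network] = self._free.pop(0)
        return self._assigned[network]

    def release(self, network: str) -> None:
        """Return a deleted network's VLAN ID to the free pool."""
        self._free.append(self._assigned.pop(network))


if __name__ == "__main__":
    vlans = VlanAllocator(100, 101)
    print(vlans.vlan_for("account-a/net1"))  # 100
    print(vlans.vlan_for("account-b/net1"))  # 101
    print(vlans.vlan_for("account-a/net1"))  # 100 again: the mapping is stable
```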

These traffic types can each be on a separate physical network, or they can be combined with certain restrictions. When you use the Add Zone wizard in the UI to create a new zone, you are guided into making only valid choices.

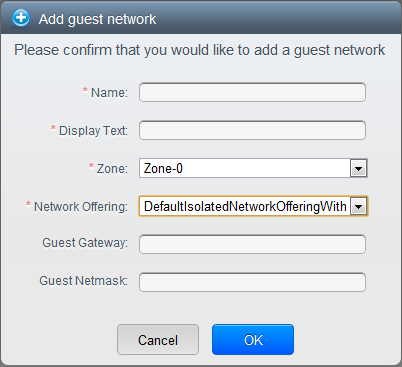

Advanced Zone Guest IP Addresses

When advanced networking is used, the administrator can create additional networks for use by the guests. These networks can span the zone and be available to all accounts, or they can be scoped to a single account, in which case only the named account may create guests that attach to these networks. The networks are defined by a VLAN ID, IP range, and gateway. The administrator may provision thousands of these networks if desired. Additionally, the administrator can reserve a part of the IP address space for non-CloudStack VMs and servers.

Advanced Zone Public IP Addresses

When advanced networking is used, the administrator can create additional networks for use by the guests. These networks can span the zone and be available to all accounts, or they can be scoped to a single account, in which case only the named account may create guests that attach to these networks. The networks are defined by a VLAN ID, IP range, and gateway. The administrator may provision thousands of these networks if desired.

System Reserved IP Addresses

In each zone, you need to configure a range of reserved IP addresses for the management network. This network carries communication between the CloudStack Management Server and various system VMs, such as Secondary Storage VMs, Console Proxy VMs, and DHCP.

The reserved IP addresses must be unique across the cloud. You cannot, for example, have a host in one zone which has the same private IP address as a host in another zone.

The hosts in a pod are assigned private IP addresses. These are typically RFC1918 addresses. The Console Proxy and Secondary Storage system VMs are also allocated private IP addresses in the CIDR of the pod that they are created in.

Make sure computing servers and Management Servers use IP addresses outside of the System Reserved IP range. For example, suppose the System Reserved IP range starts at 192.168.154.2 and ends at 192.168.154.7. CloudStack can use .2 to .7 for System VMs. This leaves the rest of the pod CIDR, from .8 to .254, for the Management Server and hypervisor hosts.
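The example above can be checked mechanically with Python's `ipaddress` module. The sketch assumes the pod CIDR is 192.168.154.0/24 with its gateway at .1, which the example implies (it leaves .8 to .254 for servers) but does not state; the function names are hypothetical.

```python
import ipaddress
from typing import Iterable, List


def in_range(ip: str, start: str, end: str) -> bool:
    """True if ip lies within the inclusive range start..end."""
    addr = ipaddress.ip_address(ip)
    return ipaddress.ip_address(start) <= addr <= ipaddress.ip_address(end)


def conflicting_servers(server_ips: Iterable[str], start: str, end: str) -> List[str]:
    """Management Server or host IPs that wrongly fall inside the System Reserved range."""
    return [ip for ip in server_ips if in_range(ip, start, end)]


def addresses_left_for_servers(cidr: str, gateway: str, start: str, end: str) -> List[str]:
    """Pod addresses outside the System Reserved range and the (assumed) gateway."""
    gw = ipaddress.ip_address(gateway)
    return [str(ip) for ip in ipaddress.ip_network(cidr).hosts()
            if ip != gw and not in_range(str(ip), start, end)]


if __name__ == "__main__":
    free = addresses_left_for_servers("192.168.154.0/24", "192.168.154.1",
                                      "192.168.154.2", "192.168.154.7")
    print(free[0], free[-1], len(free))  # 192.168.154.8 192.168.154.254 247
    print(conflicting_servers(["192.168.154.5", "192.168.154.20"],
                              "192.168.154.2", "192.168.154.7"))  # ['192.168.154.5']
```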

In all zones:

Provide private IPs for the system in each pod and provision them in CloudStack.

For KVM and XenServer, the recommended number of private IPs per pod is one per host. If you expect a pod to grow, add enough private IPs now to accommodate the growth.

In a zone that uses advanced networking:

For zones with advanced networking, we recommend provisioning enough private IPs for your total number of customers, plus enough for the required CloudStack System VMs. Typically, about 10 additional IPs are required for the System VMs. For more information about System VMs, see the section on working with SystemVMs in the Administrator’s Guide.
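The two sizing rules above reduce to simple arithmetic. This sketch reads "enough private IPs for your total number of customers" as one address per customer, which is an assumption, and uses the text's rough allowance of 10 addresses for System VMs; the function names are hypothetical.

```python
SYSTEM_VM_ALLOWANCE = 10  # "about 10 additional IPs" for System VMs, per the text


def private_ips_per_pod(current_hosts: int, expected_new_hosts: int = 0) -> int:
    """KVM/XenServer guideline: one private IP per host, including planned growth."""
    return current_hosts + expected_new_hosts


def private_ips_advanced_zone(customers: int, system_vm_ips: int = SYSTEM_VM_ALLOWANCE) -> int:
    """Advanced-zone guideline: one private IP per customer (assumed) plus System VM headroom."""
    return customers + system_vm_ips


if __name__ == "__main__":
    print(private_ips_per_pod(16, expected_new_hosts=8))  # 24
    print(private_ips_advanced_zone(200))                 # 210
```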

When advanced networking is being used, the number of private IP addresses available in each pod varies depending on which hypervisor is running on the nodes in that pod. Citrix XenServer and KVM use link-local addresses, which in theory provide more than 65,000 private IP addresses within the address block. As the pod grows over time, this should be more than enough for any reasonable number of hosts as well as IP addresses for guest virtual routers. VMware ESXi, by contrast, uses any administrator-specified subnetting scheme, and the typical administrator provides only 255 IPs per pod. Since these are shared by physical machines, the guest virtual router, and other entities, it is possible to run out of private IPs when scaling up a pod whose nodes are running ESXi.

To ensure adequate headroom to scale private IP space in an ESXi pod that uses advanced networking, use one or both of the following techniques:

- Specify a larger CIDR block for the subnet. A subnet mask with a /20 suffix will provide more than 4,000 IP addresses.

- Create multiple pods, each with its own subnet. For example, if you create 10 pods and each pod has 255 IPs, this will provide 2,550 IP addresses.
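The address counts quoted above can be reproduced with Python's `ipaddress` module. Note that a subnet's usable host count excludes the network and broadcast addresses, so a /24 yields 254 usable addresses and a /20 yields 4,094; the helper name `usable_hosts` is hypothetical.

```python
import ipaddress


def usable_hosts(prefix_len: int) -> int:
    """Usable IPv4 host addresses in a subnet of the given prefix length."""
    if not 0 <= prefix_len <= 30:
        raise ValueError("expected an IPv4 prefix length between 0 and 30")
    # num_addresses counts every address; subtract the network and broadcast addresses.
    return ipaddress.ip_network(f"0.0.0.0/{prefix_len}").num_addresses - 2


if __name__ == "__main__":
    print(usable_hosts(24))       # 254: a single /24 pod subnet
    print(usable_hosts(20))       # 4094: "more than 4,000" addresses
    print(10 * usable_hosts(24))  # 2540: ten pods, each with its own /24
    # The IPv4 link-local block (RFC 3927) contains 65,536 addresses.
    print(ipaddress.ip_network("169.254.0.0/16").num_addresses)
```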

Choosing a Deployment Architecture

The architecture used in a deployment will vary depending on the size and purpose of the deployment. This section contains examples of deployment architecture, including a small-scale deployment useful for test and trial deployments and a fully-redundant large-scale setup for production deployments.

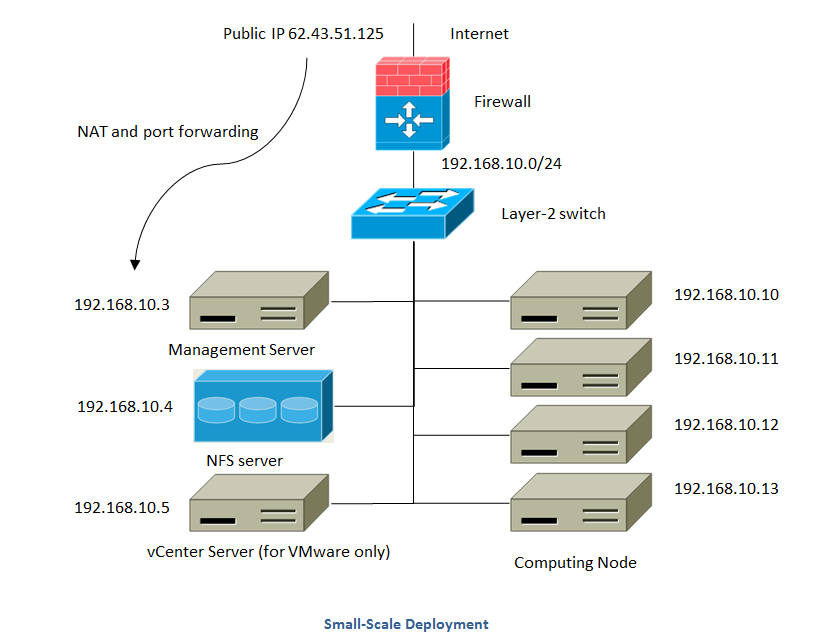

Small-Scale Deployment

This diagram illustrates the network architecture of a small-scale CloudStack deployment.

- A firewall provides a connection to the Internet. The firewall is configured in NAT mode. The firewall forwards HTTP requests and API calls from the Internet to the Management Server. The Management Server resides on the management network.

- A layer-2 switch connects all physical servers and storage.

- A single NFS server functions as both the primary and secondary storage.

- The Management Server is connected to the management network.

Large-Scale Redundant Setup

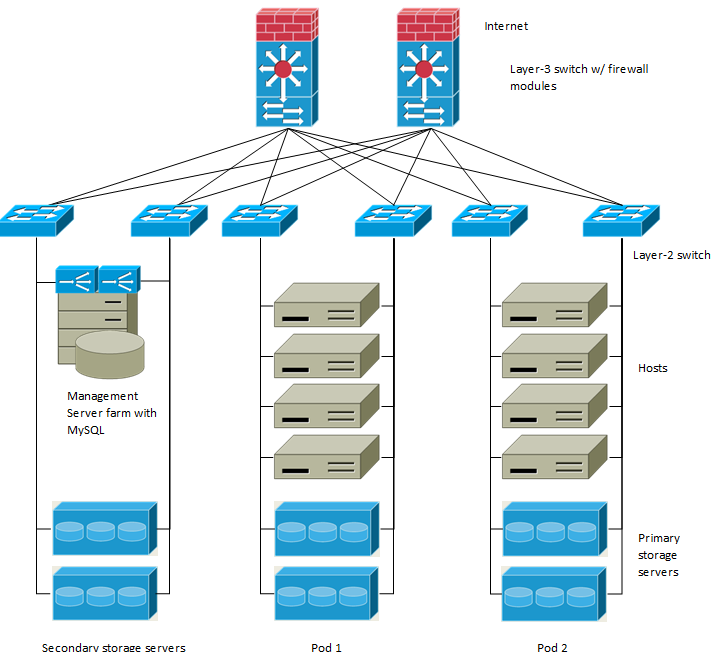

This diagram illustrates the network architecture of a large-scale CloudStack deployment.

- A layer-3 switching layer is at the core of the data center. A router redundancy protocol like VRRP should be deployed. Typically, high-end core switches also include firewall modules. Separate firewall appliances may also be used if the layer-3 switch does not have integrated firewall capabilities. The firewalls are configured in NAT mode. The firewalls provide the following functions:

  - Forward HTTP requests and API calls from the Internet to the Management Server. The Management Server resides on the management network.

  - When the cloud spans multiple zones, the firewalls should enable site-to-site VPN such that servers in different zones can directly reach each other.

- A layer-2 access switch layer is established for each pod. Multiple switches can be stacked to increase port count. In either case, redundant pairs of layer-2 switches should be deployed.

- The Management Server cluster (including front-end load balancers, Management Server nodes, and the MySQL database) is connected to the management network through a pair of load balancers.

- Secondary storage servers are connected to the management network.

- Each pod contains storage and computing servers. Each storage and computing server should have redundant NICs connected to separate layer-2 access switches.

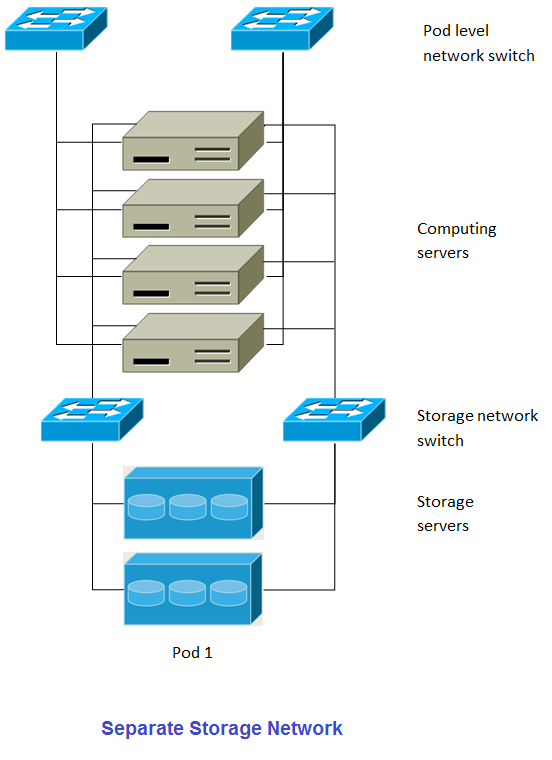

Separate Storage Network

In the large-scale redundant setup described in the previous section, storage traffic can overload the management network. A separate storage network is optional for deployments. Storage protocols such as iSCSI are sensitive to network delays. A separate storage network ensures guest network traffic contention does not impact storage performance.

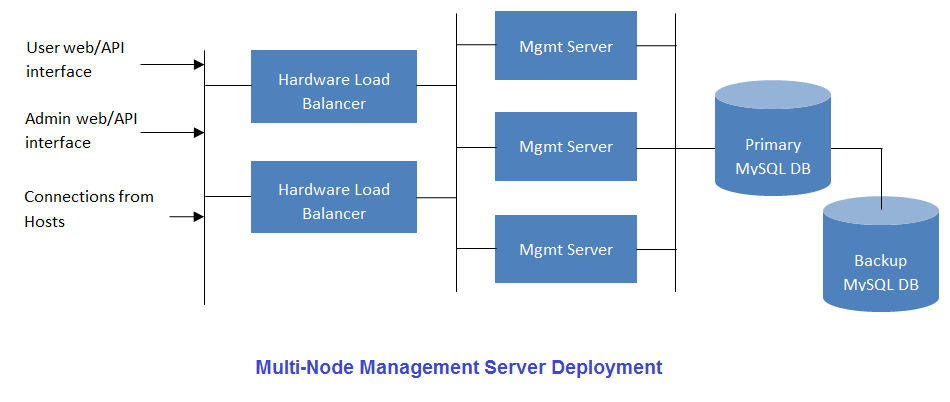

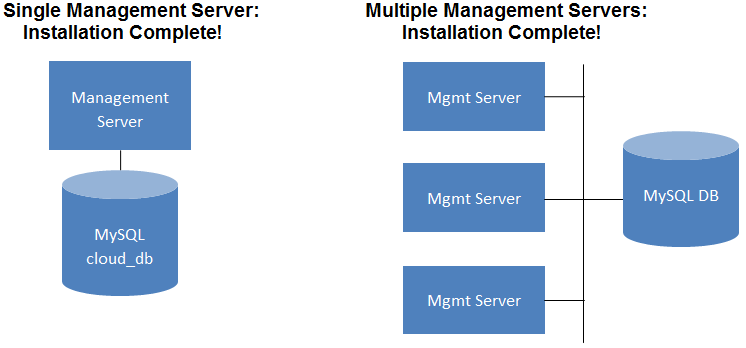

Multi-Node Management Server

The CloudStack Management Server is deployed on one or more front-end servers connected to a single MySQL database. Optionally, a pair of hardware load balancers distributes requests from the web. A backup Management Server set may be deployed using MySQL replication at a remote site to add disaster recovery (DR) capabilities.

The administrator must decide the following:

- Whether or not load balancers will be used.

- How many Management Servers will be deployed.

- Whether MySQL replication will be deployed to enable disaster recovery.

Multi-Site Deployment

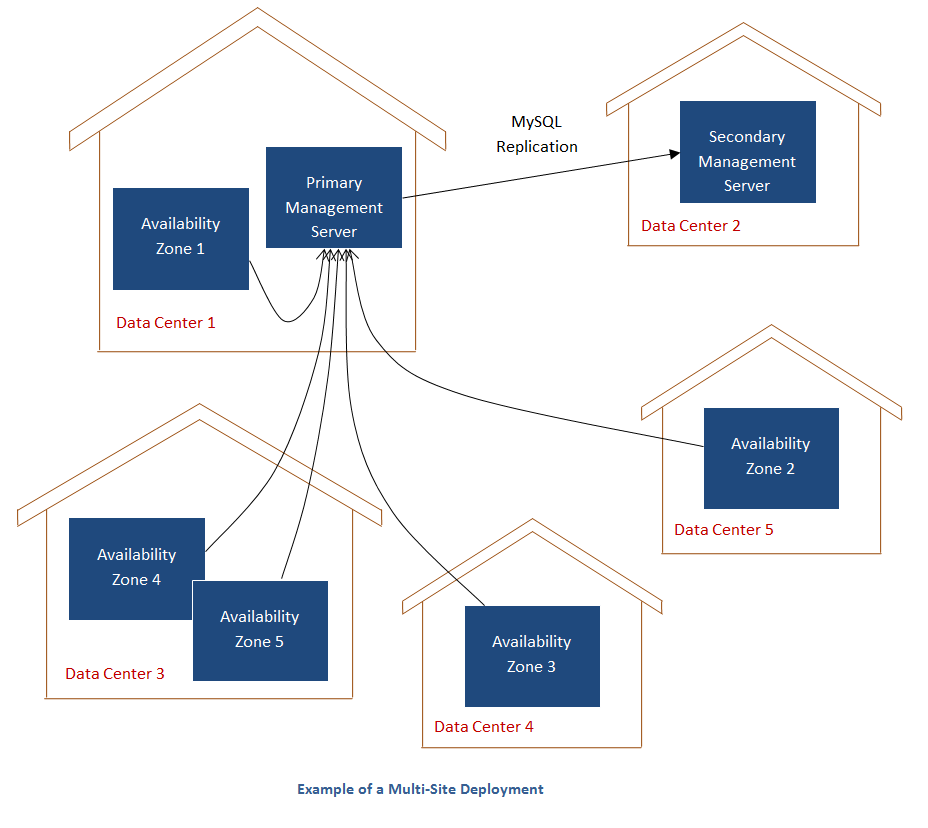

The CloudStack platform scales well into multiple sites through the use of zones. The following diagram shows an example of a multi-site deployment.

Data Center 1 houses the primary Management Server as well as zone 1. The MySQL database is replicated in real time to the secondary Management Server installation in Data Center 2.

This diagram illustrates a setup with a separate storage network. Each server has four NICs, two connected to pod-level network switches and two connected to storage network switches.

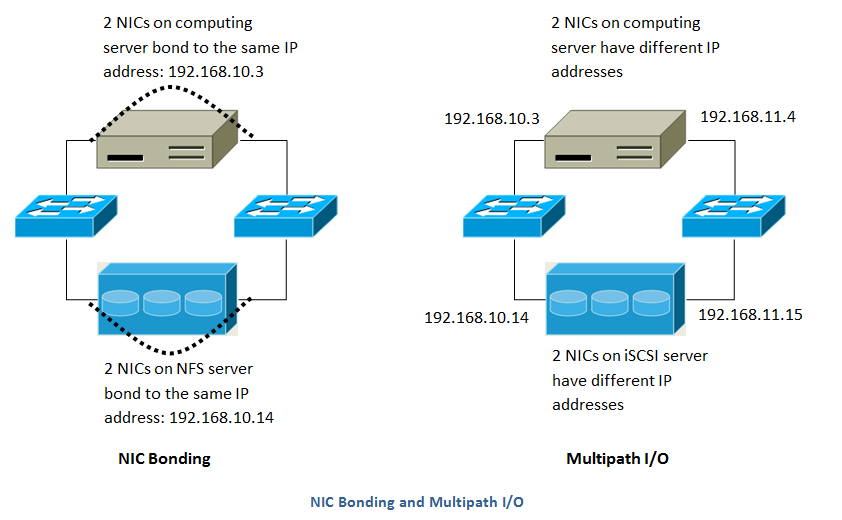

There are two ways to configure the storage network:

- Bonded NICs and redundant switches can be deployed for NFS. In NFS deployments, redundant switches and bonded NICs still result in one network (one CIDR block plus one default gateway address).

- iSCSI can take advantage of two separate storage networks (two CIDR blocks, each with its own default gateway). A multipath iSCSI client can fail over and load balance between the separate storage networks.

This diagram illustrates the differences between NIC bonding and Multipath I/O (MPIO). NIC bonding configuration involves only one network. MPIO involves two separate networks.

Choosing a Hypervisor¶

CloudStack supports many popular hypervisors. Your cloud can consist entirely of hosts running a single hypervisor, or you can use multiple hypervisors. Each cluster of hosts must run the same hypervisor.

You might already have an installed base of nodes running a particular hypervisor, in which case your choice of hypervisor has already been made. If you are starting from scratch, you need to decide what hypervisor software best suits your needs. A discussion of the relative advantages of each hypervisor is outside the scope of our documentation. However, it helps to know which features of each hypervisor are supported by CloudStack. The following table provides this information.

| Feature | XenServer | vSphere | KVM - RHEL | LXC | HyperV | Bare Metal |
|---|---|---|---|---|---|---|
| Network Throttling | Yes | Yes | No | No | ? | N/A |
| Security groups in zones that use basic networking | Yes | No | Yes | Yes | ? | No |
| iSCSI | Yes | Yes | Yes | Yes | Yes | N/A |
| FibreChannel | Yes | Yes | Yes | Yes | Yes | N/A |
| Local Disk | Yes | Yes | Yes | Yes | Yes | Yes |
| HA | Yes | Yes (Native) | Yes | ? | Yes | N/A |
| Snapshots of local disk | Yes | Yes | Yes | ? | ? | N/A |
| Local disk as data disk | Yes | No | Yes | Yes | Yes | N/A |
| Workload balancing | No | DRS | No | No | ? | N/A |
| Manual live migration of VMs from host to host | Yes | Yes | Yes | ? | Yes | N/A |
| Conserve management traffic IP address by using link local network to communicate with virtual router | Yes | No | Yes | Yes | ? | N/A |
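When several hypervisors are still under consideration, the table above can be treated as data. The following Python sketch transcribes a few of its rows (feature names shortened, subset chosen only for illustration) and lists the hypervisors that report support for every required feature. It assumes that "?" and "N/A" should count as unsupported, which is a conservative reading rather than a statement from CloudStack; `candidates` and `FEATURES` are hypothetical names.

```python
from typing import Dict, Iterable, List

HYPERVISORS = ("XenServer", "vSphere", "KVM - RHEL", "LXC", "HyperV", "Bare Metal")


def row(*cells: str) -> Dict[str, str]:
    """Map one table row's cells onto the hypervisor columns, left to right."""
    return dict(zip(HYPERVISORS, cells))


# A few rows transcribed from the feature table (names shortened).
FEATURES: Dict[str, Dict[str, str]] = {
    "Network Throttling": row("Yes", "Yes", "No", "No", "?", "N/A"),
    "HA": row("Yes", "Yes (Native)", "Yes", "?", "Yes", "N/A"),
    "Snapshots of local disk": row("Yes", "Yes", "Yes", "?", "?", "N/A"),
    "Local disk as data disk": row("Yes", "No", "Yes", "Yes", "Yes", "N/A"),
    "Manual live migration": row("Yes", "Yes", "Yes", "?", "Yes", "N/A"),
}


def is_supported(cell: str) -> bool:
    # Conservative assumption: unknown ("?") and not applicable ("N/A") count as unsupported.
    return cell not in ("No", "?", "N/A")


def candidates(required: Iterable[str]) -> List[str]:
    """Return hypervisors whose cells show support for every required feature."""
    required = list(required)
    return [
        hv for hv in HYPERVISORS
        if all(is_supported(FEATURES[feature][hv]) for feature in required)
    ]


print(candidates(["HA", "Manual live migration"]))
# ['XenServer', 'vSphere', 'KVM - RHEL', 'HyperV']
```

Cells such as "Yes (Native)" or "DRS" count as support here; only the explicit "No", "?", and "N/A" values exclude a hypervisor.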

Hypervisor Support for Primary Storage¶

The following table shows storage options and parameters for different hypervisors.

| Primary Storage Type | XenServer | vSphere | KVM - RHEL | LXC | HyperV |
|---|---|---|---|---|---|
| Format for Disks, Templates, and Snapshots | VHD | VMDK | QCOW2 | | VHD |
| iSCSI support | CLVM | VMFS | Yes via Shared Mountpoint | Yes via Shared Mountpoint | No |
| Fiber Channel support | Yes, Via existing SR | VMFS | Yes via Shared Mountpoint | Yes via Shared Mountpoint | No |
| NFS support | Yes | Yes | Yes | Yes | No |
| Local storage support | Yes | Yes | Yes | Yes | Yes |
| Storage over-provisioning | NFS | NFS and iSCSI | NFS | | No |
| SMB/CIFS | No | No | No | No | Yes |

XenServer uses a clustered LVM system to store VM images on iSCSI and Fiber Channel volumes and does not support over-provisioning in the hypervisor. The storage server itself, however, can support thin provisioning. As a result, CloudStack can still support storage over-provisioning by running on thin-provisioned storage volumes.

KVM supports “Shared Mountpoint” storage. A shared mountpoint is a file system path local to each server in a given cluster. The path must be the same across all hosts in the cluster, for example /mnt/primary1. This shared mountpoint is assumed to be a clustered filesystem such as OCFS2. In this case, CloudStack does not attempt to mount or unmount the storage as it does with NFS; the administrator must ensure that the storage is available.

With NFS storage, CloudStack manages the overprovisioning. In this case the global configuration parameter storage.overprovisioning.factor controls the degree of overprovisioning. This is independent of hypervisor type.
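The arithmetic behind storage.overprovisioning.factor can be sketched as follows. This is a simplified Python model under the assumption that the allocatable capacity of an NFS primary storage pool is its physical size multiplied by the factor; it ignores any other capacity thresholds CloudStack may apply, and the function names are hypothetical.

```python
def allocatable_gb(physical_gb: float, overprovisioning_factor: float) -> float:
    """Capacity that may be allocated on an NFS primary storage pool, assuming it is
    the physical size scaled by storage.overprovisioning.factor."""
    if overprovisioning_factor <= 0:
        raise ValueError("overprovisioning factor must be positive")
    return physical_gb * overprovisioning_factor


def can_allocate(physical_gb: float, overprovisioning_factor: float,
                 allocated_gb: float, request_gb: float) -> bool:
    """True if a new volume of request_gb still fits under the overprovisioned limit."""
    return allocated_gb + request_gb <= allocatable_gb(physical_gb, overprovisioning_factor)


# A 2000 GB NFS export with a factor of 2 can back 4000 GB of allocated volumes.
print(allocatable_gb(2000, 2))            # 4000
print(can_allocate(2000, 2, 3500, 400))   # True
print(can_allocate(2000, 2, 3500, 600))   # False
```

Because overprovisioned volumes rely on thin provisioning, the real safety margin is the physical free space on the export, which this sketch does not track.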

Local storage is an option for primary storage for vSphere, XenServer, and KVM. When the local disk option is enabled, a local disk storage pool is automatically created on each host. To use local storage for the System Virtual Machines (such as the Virtual Router), set system.vm.use.local.storage to true in global configuration.

CloudStack supports multiple primary storage pools in a Cluster. For example, you could provision 2 NFS servers in primary storage. Or you could provision 1 iSCSI LUN initially and then add a second iSCSI LUN when the first approaches capacity.

Best Practices¶

Deploying a cloud is challenging. There are many different technology choices to make, and CloudStack is flexible enough in its configuration that there are many possible ways to combine and configure the chosen technology. This section contains suggestions and requirements about cloud deployments.

These should be treated as suggestions and not absolutes. However, we do encourage anyone planning to build a cloud outside of these guidelines to seek guidance and advice on the project mailing lists.

Process Best Practices¶

- A staging system that models the production environment is strongly advised. It is critical if customizations have been applied to CloudStack.

- Allow adequate time for installation, a beta, and learning the system. Installs with basic networking can be done in hours. Installs with advanced networking usually take several days for the first attempt, with complicated installations taking longer. For a full production system, allow at least 4-8 weeks for a beta to work through all of the integration issues. You can get help from fellow users on the cloudstack-users mailing list.

Setup Best Practices¶

- Each host should be configured to accept connections only from well-known entities such as the CloudStack Management Server or your network monitoring software.

- Use multiple clusters per pod if you need to achieve a certain switch density.

- Primary storage mountpoints or LUNs should not exceed 6 TB in size. It is better to have multiple smaller primary storage elements per cluster than one large one.

- When exporting shares on primary storage, avoid data loss by restricting the range of IP addresses that can access the storage. See “Linux NFS on Local Disks and DAS” or “Linux NFS on iSCSI”.

- NIC bonding is straightforward to implement and provides increased reliability.

- 10G networks are generally recommended for storage access when larger servers that can support relatively more VMs are used.

- Host capacity should generally be modeled in terms of RAM for the guests. Storage and CPU may be overprovisioned. RAM may not. RAM is usually the limiting factor in capacity designs.

- (XenServer) Configure the XenServer dom0 settings to allocate more memory to dom0. This can enable XenServer to handle larger numbers of virtual machines. We recommend 2940 MB of RAM for XenServer dom0. For instructions on how to do this, see http://support.citrix.com/article/CTX126531. The article refers to XenServer 5.6, but the same information applies to XenServer 6.0.
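The guidance that host capacity should be modeled in terms of RAM, with CPU allowed to be overprovisioned, can be illustrated with a small Python estimate. This is a sketch under stated assumptions: all guests are identical, the hypervisor's own reservation (such as the 2940 MB recommended for XenServer dom0) is subtracted from host RAM, and CPU capacity is multiplied by an assumed overprovisioning factor. The host and VM sizes below are hypothetical.

```python
def vms_per_host(*, host_ram_mb: int, reserved_ram_mb: int, vm_ram_mb: int,
                 host_cpu_mhz: int, vm_cpu_mhz: int,
                 cpu_overprovisioning: float = 1.0) -> int:
    """Estimate how many identical VMs fit on one host.

    RAM is not overprovisioned: only the RAM left after the hypervisor's own
    reservation (for example, XenServer dom0) is available to guests.
    CPU capacity is scaled by an assumed overprovisioning factor.
    """
    by_ram = (host_ram_mb - reserved_ram_mb) // vm_ram_mb
    by_cpu = int(host_cpu_mhz * cpu_overprovisioning // vm_cpu_mhz)
    return min(by_ram, by_cpu)


# Hypothetical host: 128 GB RAM, 2940 MB for XenServer dom0, 41600 MHz of CPU.
print(vms_per_host(host_ram_mb=131072, reserved_ram_mb=2940, vm_ram_mb=4096,
                   host_cpu_mhz=41600, vm_cpu_mhz=2000, cpu_overprovisioning=2.0))
# 31 (RAM-bound; CPU alone would allow 41)
```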

Maintenance Best Practices¶

- Monitor host disk space. Many host failures occur because the host’s root disk fills up from logs that were not rotated adequately.

- Monitor the total number of VM instances in each cluster, and disable allocation to the cluster if the total is approaching the maximum that the hypervisor can handle. Be sure to leave a safety margin to allow for the possibility of one or more hosts failing, which would increase the VM load on the other hosts as the VMs are redeployed. Consult the documentation for your chosen hypervisor to find the maximum permitted number of VMs per host, then use CloudStack global configuration settings to set this as the default limit. Monitor the VM activity in each cluster and keep the total number of VMs below a safe level that allows for the occasional host failure. For example, if there are N hosts in the cluster, and you want to allow for one host in the cluster to be down at any given time, the total number of VM instances you can permit in the cluster is at most (N-1) * (per-host-limit). Once a cluster reaches this number of VMs, use the CloudStack UI to disable allocation to the cluster.
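The (N-1) * (per-host-limit) rule above generalizes to tolerating more than one failed host. The following Python sketch computes the limit and the point at which allocation to the cluster should be disabled; the function names and the per-host limit of 50 are hypothetical, and the real per-host maximum comes from your hypervisor's documentation.

```python
def cluster_vm_limit(hosts: int, per_host_limit: int, tolerated_failures: int = 1) -> int:
    """Maximum VMs to admit so the surviving hosts can absorb the failed hosts' VMs:
    (N - failures) * per-host-limit."""
    if not 0 <= tolerated_failures < hosts:
        raise ValueError("tolerated_failures must be between 0 and hosts - 1")
    return (hosts - tolerated_failures) * per_host_limit


def should_disable_allocation(current_vms: int, hosts: int, per_host_limit: int,
                              tolerated_failures: int = 1) -> bool:
    """True once the cluster has reached the safe limit."""
    return current_vms >= cluster_vm_limit(hosts, per_host_limit, tolerated_failures)


print(cluster_vm_limit(8, 50))                # 350
print(should_disable_allocation(349, 8, 50))  # False
print(should_disable_allocation(350, 8, 50))  # True
```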

Warning

Failing to apply up-to-date hotfixes can lead to data corruption and lost VMs.

Be sure all the hotfixes provided by the hypervisor vendor are applied. Track the release of hypervisor patches through your hypervisor vendor’s support channel, and apply patches as soon as possible after they are released. CloudStack will not track or notify you of required hypervisor patches. It is essential that your hosts are completely up to date with the provided hypervisor patches. The hypervisor vendor is likely to refuse to support any system that is not up to date with patches.

Network Setup¶

Achieving the correct networking setup is crucial to a successful CloudStack installation. This section contains information to help you make decisions and follow the right procedures to get your network set up correctly.

Basic and Advanced Networking¶

CloudStack provides two styles of networking:

- Basic: For AWS-style networking. Provides a single network where guest isolation can be provided through layer-3 means such as security groups (IP address source filtering).

- Advanced: For more sophisticated network topologies. This network model provides the most flexibility in defining guest networks, but requires more configuration steps than basic networking.

Each zone has either basic or advanced networking. Once the choice of networking model for a zone has been made and configured in CloudStack, it cannot be changed. A zone is either basic or advanced for its entire lifetime.

The following table compares the networking features in the two networking models.

| Networking Feature | Basic Network | Advanced Network |
|---|---|---|
| Number of networks | Single network | Multiple networks |
| Firewall type | Physical | Physical and Virtual |
| Load balancer | Physical | Physical and Virtual |
| Isolation type | Layer 3 | Layer 2 and Layer 3 |
| VPN support | No | Yes |
| Port forwarding | Physical | Physical and Virtual |
| 1:1 NAT | Physical | Physical and Virtual |
| Source NAT | No | Physical and Virtual |
| Userdata | Yes | Yes |
| Network usage monitoring | sFlow / netFlow at physical router | Hypervisor and Virtual Router |
| DNS and DHCP | Yes | Yes |

The two types of networking may be in use in the same cloud. However, a given zone must use either Basic Networking or Advanced Networking.

Different types of network traffic can be segmented on the same physical network. Guest traffic can also be segmented by account. To isolate traffic, you can use separate VLANs. If you are using separate VLANs on a single physical network, make sure the VLAN tags are in separate numerical ranges.
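Keeping VLAN tags in separate numerical ranges is easy to check mechanically before configuring switches. The following Python sketch takes a hypothetical plan mapping each traffic type to an inclusive (low, high) VLAN range and reports every pair of ranges that share a tag; it also rejects IDs outside 1-4094, since IEEE 802.1Q reserves VLAN IDs 0 and 4095.

```python
from itertools import combinations
from typing import Dict, List, Tuple


def overlapping_ranges(plan: Dict[str, Tuple[int, int]]) -> List[Tuple[str, str]]:
    """Return every pair of traffic types whose inclusive VLAN ranges share a tag."""
    for name, (low, high) in plan.items():
        # 802.1Q VLAN IDs 0 and 4095 are reserved, so usable tags are 1-4094.
        if not 1 <= low <= high <= 4094:
            raise ValueError(f"{name}: invalid VLAN range {low}-{high}")
    # Compare all pairs (not just neighbours) so a wide range covering several others is caught.
    return [
        (a, b)
        for (a, (lo_a, hi_a)), (b, (lo_b, hi_b)) in combinations(plan.items(), 2)
        if lo_a <= hi_b and lo_b <= hi_a
    ]


print(overlapping_ranges({"public": (500, 599), "guest": (600, 999)}))  # []
print(overlapping_ranges({"public": (500, 650), "guest": (600, 999)}))  # [('public', 'guest')]
```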

VLAN Allocation Example¶

VLANs are required for public and guest traffic. The following is an example of a VLAN allocation scheme:

| VLAN IDs | Traffic type | Scope |
|---|---|---|
| less than 500 | Management traffic. Reserved for administrative purposes. | Accessible to CloudStack software, hypervisors, and system VMs. |
| 500-599 | VLAN carrying public traffic. | CloudStack accounts. |
| 600-799 | VLANs carrying guest traffic. | CloudStack accounts. Account-specific VLAN is chosen from this pool. |
| 800-899 | VLANs carrying guest traffic. | CloudStack accounts. The CloudStack admin chooses an account-specific VLAN to assign to that account. |
| 900-999 | VLAN carrying guest traffic. | CloudStack accounts. Can be scoped by project, domain, or all accounts. |
| greater than 1000 | Reserved for future use. | |
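The example allocation scheme can also be expressed as a lookup, which is handy when auditing which traffic type a given VLAN ID belongs to. This Python sketch transcribes the table with inclusive bounds; the lower bound of 1 and upper bound of 4094 are assumptions taken from the 802.1Q usable range, and VLAN 1000 is deliberately left unassigned because the table covers only "900-999" and "greater than 1000".

```python
from typing import Optional

# Inclusive bounds transcribed from the example allocation table.
# VLAN 1000 falls between "900-999" and "greater than 1000", so it is unassigned here.
EXAMPLE_PLAN = [
    (1, 499, "management"),
    (500, 599, "public"),
    (600, 799, "guest: pool chosen by CloudStack"),
    (800, 899, "guest: assigned by the admin"),
    (900, 999, "guest: scoped by project, domain, or all accounts"),
    (1001, 4094, "reserved for future use"),
]


def classify_vlan(vlan_id: int) -> Optional[str]:
    """Return the traffic type the example scheme assigns to vlan_id, or None."""
    for low, high, traffic in EXAMPLE_PLAN:
        if low <= vlan_id <= high:
            return traffic
    return None


print(classify_vlan(201))   # management
print(classify_vlan(650))   # guest: pool chosen by CloudStack
print(classify_vlan(1000))  # None
```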

Example Hardware Configuration¶

This section contains an example configuration of specific switch models for zone-level layer-3 switching. It assumes VLAN management protocols, such as VTP or GVRP, have been disabled. The example scripts must be changed appropriately if you choose to use VTP or GVRP.

Dell 62xx¶

The following steps show how a Dell 62xx is configured for zone-level layer-3 switching. These steps assume VLAN 201 is used to route untagged private IPs for pod 1, and pod 1’s layer-2 switch is connected to Ethernet port 1/g1.

The Dell 62xx Series switch supports up to 1024 VLANs.

Configure all the VLANs in the database.

```
vlan database
vlan 200-999
exit
```

Configure Ethernet port 1/g1.

```
interface ethernet 1/g1
switchport mode general
switchport general pvid 201
switchport general allowed vlan add 201 untagged
switchport general allowed vlan add 300-999 tagged
exit
```

The statements configure Ethernet port 1/g1 as follows:

- VLAN 201 is the native untagged VLAN for port 1/g1.

- All VLANs (300-999) are passed to all the pod-level layer-2 switches.
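When a zone has several pods, the same port stanza is repeated once per pod uplink. The following Python sketch renders the Dell 62xx configuration shown above from parameters. The multi-pod layout in the usage lines (pod N's layer-2 switch on port 1/gN with native VLAN 200 + N) is a hypothetical assumption for illustration; only the pod 1 values (port 1/g1, VLAN 201) come from this guide.

```python
def dell_zone_port_config(port: str, native_vlan: int, tagged_vlans: str = "300-999") -> str:
    """Render the zone-level Dell 62xx port configuration for one pod uplink."""
    return "\n".join([
        f"interface ethernet {port}",
        "switchport mode general",
        f"switchport general pvid {native_vlan}",                      # untagged VLAN for the pod
        f"switchport general allowed vlan add {native_vlan} untagged",
        f"switchport general allowed vlan add {tagged_vlans} tagged",  # VLANs passed to the pod switch
        "exit",
    ])


# Hypothetical two-pod layout: pod N's layer-2 switch on port 1/gN, native VLAN 200 + N.
for pod in (1, 2):
    print(dell_zone_port_config(f"1/g{pod}", 200 + pod))
```

For pod 1 this reproduces the configuration above line for line; any additional native VLANs must fall inside the range created in the VLAN database.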

Cisco 3750¶

The following steps show how a Cisco 3750 is configured for zone-level layer-3 switching. These steps assume VLAN 201 is used to route untagged private IPs for pod 1, and pod 1’s layer-2 switch is connected to GigabitEthernet1/0/1.

Setting VTP mode to transparent allows you to use extended-range VLAN IDs (above 1005). Since this example only uses VLANs up to 999, VTP transparent mode is not strictly required.

```
vtp mode transparent
vlan 200-999
exit
```

Configure GigabitEthernet1/0/1.

```
interface GigabitEthernet1/0/1
switchport trunk encapsulation dot1q
switchport mode trunk
switchport trunk native vlan 201
exit
```

The statements configure GigabitEthernet1/0/1 as follows:

- VLAN 201 is the native untagged VLAN for port GigabitEthernet1/0/1.

- Cisco passes all VLANs by default. As a result, all VLANs (300-999) are passed to all the pod-level layer-2 switches.

Layer-2 Switch¶

The layer-2 switch is the access switching layer inside the pod.

- It should trunk all VLANs into every computing host.

- It should switch traffic for the management network containing computing and storage hosts. The layer-3 switch will serve as the gateway for the management network.

The following sections contain example configurations for specific switch models for pod-level layer-2 switching. They assume VLAN management protocols such as VTP or GVRP have been disabled. The scripts must be changed appropriately if you choose to use VTP or GVRP.

Dell 62xx¶

The following steps show how a Dell 62xx is configured for pod-level layer-2 switching.

Configure all the VLANs in the database.

```
vlan database
vlan 300-999
exit
```

VLAN 201 is used to route untagged private IP addresses for pod 1, and pod 1 is connected to this layer-2 switch.

```
interface range ethernet all
switchport mode general
switchport general allowed vlan add 300-999 tagged
exit
```

The statements configure all Ethernet ports to function as follows:

- All ports are configured the same way.

- All VLANs (300-999) are passed through all the ports of the layer-2 switch.

Cisco 3750¶

The following steps show how a Cisco 3750 is configured for pod-level layer-2 switching.

Setting VTP mode to transparent allows you to use extended-range VLAN IDs (above 1005). Since this example only uses VLANs up to 999, VTP transparent mode is not strictly required.

```
vtp mode transparent
vlan 300-999
exit
```

Configure all ports to dot1q and set 201 as the native VLAN.

```
interface range GigabitEthernet 1/0/1-24
switchport trunk encapsulation dot1q
switchport mode trunk
switchport trunk native vlan 201
exit
```

By default, Cisco passes all VLANs. Cisco switches complain if the native VLAN IDs differ when two ports are connected together. That is why you must specify VLAN 201 as the native VLAN on the layer-2 switch.

Hardware Firewall¶

All deployments should have a firewall protecting the Management Server; see Generic Firewall Provisions. Optionally, some deployments may also have a Juniper SRX firewall that will be the default gateway for the guest networks; see External Guest Firewall Integration for Juniper SRX (Optional).

Generic Firewall Provisions¶

The hardware firewall is required to serve two purposes:

- Protect the Management Servers. NAT and port forwarding should be configured to direct traffic from the public Internet to the Management Servers.

- Route management network traffic between multiple zones. Site-to-site VPN should be configured between multiple zones.

To achieve the above purposes you must set up fixed configurations for the firewall. Firewall rules and policies need not change as users are provisioned into the cloud. Any brand of hardware firewall that supports NAT and site-to-site VPN can be used.

External Guest Firewall Integration for Juniper SRX (Optional)¶

Note

Available only for guests using advanced networking.

CloudStack provides for direct management of the Juniper SRX series of firewalls. This enables CloudStack to establish static NAT mappings from public IPs to guest VMs, and to use the Juniper device in place of the virtual router for firewall services. You can have one or more Juniper SRX per zone. This feature is optional. If Juniper integration is not provisioned, CloudStack will use the virtual router for these services.

The Juniper SRX can optionally be used in conjunction with an external load balancer. External Network elements can be deployed in a side-by-side or inline configuration.

CloudStack requires the Juniper SRX firewall to be configured as follows:

Note

Supported SRX software version is 10.3 or higher.

Install your SRX appliance according to the vendor’s instructions.

Connect one interface to the management network and one interface to the public network. Alternatively, you can connect the same interface to both networks and use a VLAN for the public network.

Make sure “vlan-tagging” is enabled on the private interface.

Record the public and private interface names. If you used a VLAN for the public interface, add a “.[VLAN TAG]” after the interface name. For example, if you are using ge-0/0/3 for your public interface and VLAN tag 301, your public interface name would be “ge-0/0/3.301”. Your private interface name should always be untagged because the CloudStack software automatically creates tagged logical interfaces.

Create a public security zone and a private security zone. By default, these will already exist and will be called “untrust” and “trust”. Add the public interface to the public zone and the private interface to the private zone. Note down the security zone names.

Make sure there is a security policy from the private zone to the public zone that allows all traffic.

Note the username and password of the account you want the CloudStack software to log in to when it is programming rules.

Make sure the “ssh” and “xnm-clear-text” system services are enabled.

If traffic metering is desired:

Create an incoming firewall filter and an outgoing firewall filter. These filters should have the same names as your public security zone and private security zone, respectively. The filters should be set to be “interface-specific”. For example, here is the configuration where the public zone is “untrust” and the private zone is “trust”:

```
root@cloud-srx# show firewall
filter trust {
    interface-specific;
}
filter untrust {
    interface-specific;
}
```

Add the firewall filters to your public interface. For example, a sample configuration output (for public interface ge-0/0/3.0, public security zone untrust, and private security zone trust) is:

```
ge-0/0/3 {
    unit 0 {
        family inet {
            filter {
                input untrust;
                output trust;
            }
            address 172.25.0.252/16;
        }
    }
}
```

Make sure all VLANs are brought to the private interface of the SRX.

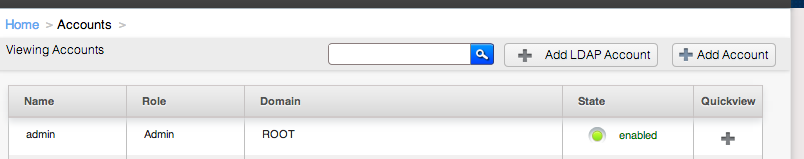

After the CloudStack Management Server is installed, log in to the CloudStack UI as administrator.

In the left navigation bar, click Infrastructure.

In Zones, click View More.

Choose the zone you want to work with.

Click the Network tab.

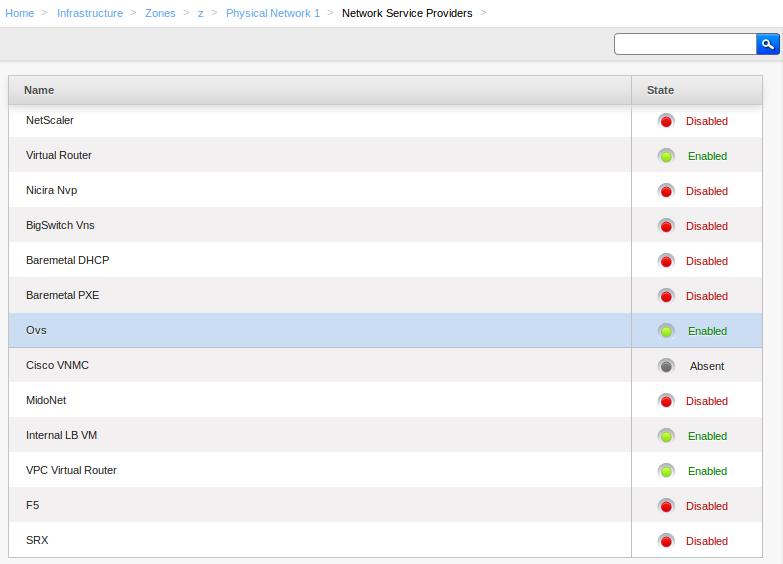

In the Network Service Providers node of the diagram, click Configure. (You might have to scroll down to see this.)

Click SRX.

Click the Add New SRX button (+) and provide the following:

- IP Address: The IP address of the SRX.

- Username: The user name of the account on the SRX that CloudStack should use.

- Password: The password of the account.

- Public Interface: The name of the public interface on the SRX. For example, ge-0/0/2. A “.x” at the end of the interface name indicates the VLAN that is in use.

- Private Interface: The name of the private interface on the SRX. For example, ge-0/0/1.

- Usage Interface: (Optional) Typically, the public interface is used to meter traffic. If you want to use a different interface, specify its name here.

- Number of Retries: The number of times to attempt a command on the SRX before failing. The default value is 2.

- Timeout (seconds): The time to wait for a command on the SRX before considering it failed. Default is 300 seconds.

- Public Network: The name of the public network on the SRX. For example, untrust.

- Private Network: The name of the private network on the SRX. For example, trust.

- Capacity: The number of networks the device can handle.

- Dedicated: When marked as dedicated, this device will be dedicated to a single account. When Dedicated is checked, the value in the Capacity field is ignored and the capacity is implicitly 1.

Click OK.

Click Global Settings. Set the parameter external.network.stats.interval to indicate how often you want CloudStack to fetch network usage statistics from the Juniper SRX. If you are not using the SRX to gather network usage statistics, set to 0.
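The interface-naming rule from the SRX preparation steps (append “.[VLAN TAG]” to the public interface name when the public network is on a VLAN, and always give the private interface untagged) can be captured in a small helper, for example when preparing values for the Add New SRX form. This is a hypothetical Python sketch; the private-interface check only rejects a “.” suffix and is not a full Junos interface-name validator.

```python
from typing import Optional


def srx_public_interface(physical: str, vlan_tag: Optional[int] = None) -> str:
    """Public interface name: 'ge-0/0/3' or, with a VLAN, 'ge-0/0/3.301'."""
    if vlan_tag is None:
        return physical
    if not 1 <= vlan_tag <= 4094:
        raise ValueError(f"invalid VLAN tag {vlan_tag}")
    return f"{physical}.{vlan_tag}"


def srx_private_interface(name: str) -> str:
    """The private interface must be untagged; CloudStack creates the tagged
    logical interfaces itself."""
    if "." in name:
        raise ValueError(f"private interface {name!r} should not carry a unit/VLAN suffix")
    return name


print(srx_public_interface("ge-0/0/3", 301))  # ge-0/0/3.301
print(srx_private_interface("ge-0/0/1"))      # ge-0/0/1
```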

External Guest Firewall Integration for Cisco VNMC (Optional)¶

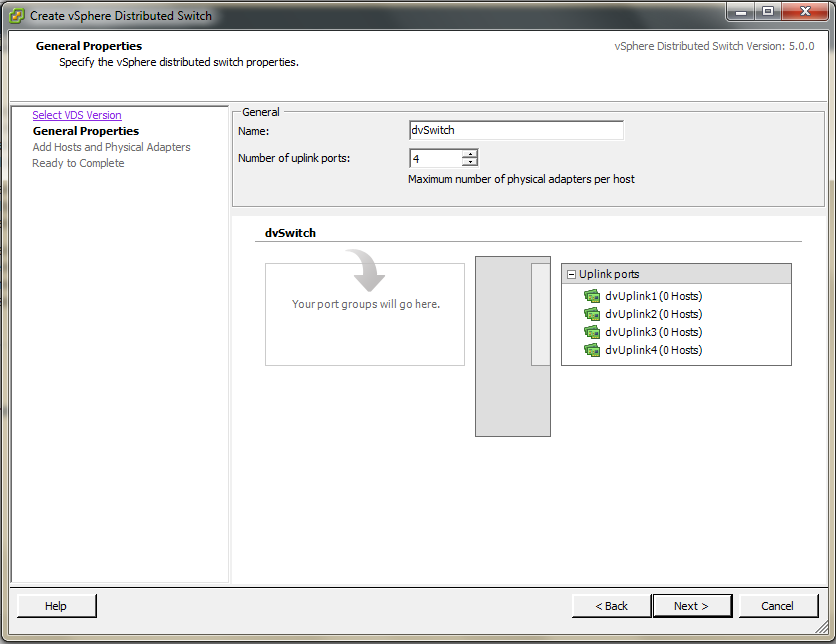

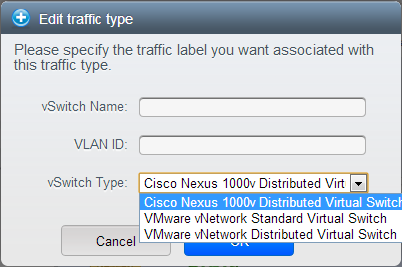

Cisco Virtual Network Management Center (VNMC) provides centralized multi-device and policy management for Cisco Network Virtual Services. You can integrate Cisco VNMC with CloudStack to leverage the firewall and NAT service offered by ASA 1000v Cloud Firewall. Use it in a Cisco Nexus 1000v dvSwitch-enabled cluster in CloudStack. In such a deployment, you will be able to:

- Configure Cisco ASA 1000v firewalls. You can configure one per guest network.

- Use Cisco ASA 1000v firewalls to create and apply security profiles that contain ACL policy sets for both ingress and egress traffic.

- Use Cisco ASA 1000v firewalls to create and apply Source NAT, Port Forwarding, and Static NAT policy sets.

CloudStack supports Cisco VNMC on Cisco Nexus 1000v dvSwitch-enabled VMware hypervisors.

Using Cisco ASA 1000v Firewall, Cisco Nexus 1000v dvSwitch, and Cisco VNMC in a Deployment¶

Cisco ASA 1000v firewall is supported only in Isolated Guest Networks.

Cisco ASA 1000v firewall is not supported on VPC.

Cisco ASA 1000v firewall is not supported for load balancing.

When a guest network is created with the Cisco VNMC firewall provider, an additional public IP is acquired along with the Source NAT IP. The Source NAT IP is used for the rules, whereas the additional IP is used for the ASA outside interface. Ensure that this additional public IP is not released. You can identify this IP as soon as the network is in the Implemented state and before acquiring any further public IPs: the additional IP is the one that is not marked as Source NAT. You can also find the IP used for the ASA outside interface by looking at the Cisco VNMC used in your guest network.

Use the public IP address range from a single subnet. You cannot add IP addresses from different subnets.

Only one ASA instance per VLAN is allowed because multiple VLANs cannot be trunked to ASA ports. Therefore, you can use only one ASA instance in a guest network.

Only one Cisco VNMC per zone is allowed.

Supported only in Inline mode deployment with load balancer.

The ASA firewall rule is applicable to all the public IPs in the guest network. Unlike the firewall rules created on virtual router, a rule created on the ASA device is not tied to a specific public IP.

Use a version of Cisco Nexus 1000v dvSwitch that supports the vservice command. For example: nexus-1000v.4.2.1.SV1.5.2b.bin

Cisco VNMC requires the vservice command to be available on the Nexus switch to create a guest network in CloudStack.
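Identifying the additional public IP (the one not marked as Source NAT) is simple to automate while the guest network still holds only those two addresses. The following Python sketch assumes each entry looks like an item from the CloudStack listPublicIpAddresses response, with `ipaddress` and `issourcenat` fields; the sample addresses are placeholders from the documentation range 203.0.113.0/24. It refuses to guess once more than one non-Source-NAT IP is present, because the ASA outside IP is then no longer identifiable this way.

```python
from typing import Dict, List


def asa_outside_ip(public_ips: List[Dict]) -> str:
    """Return the single non-Source-NAT public IP of a freshly implemented guest network.

    Entries are assumed to follow the listPublicIpAddresses response shape,
    with 'ipaddress' and 'issourcenat' fields.
    """
    others = [ip["ipaddress"] for ip in public_ips if not ip.get("issourcenat", False)]
    if len(others) != 1:
        # After more public IPs are acquired, the non-Source-NAT IP is no longer unique.
        raise ValueError(f"expected exactly one non-Source-NAT IP, found {len(others)}")
    return others[0]


sample = [
    {"ipaddress": "203.0.113.10", "issourcenat": True},
    {"ipaddress": "203.0.113.11", "issourcenat": False},
]
print(asa_outside_ip(sample))  # 203.0.113.11
```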

Configure Cisco Nexus 1000v dvSwitch in a vCenter environment.

Create Port profiles for both internal and external network interfaces on Cisco Nexus 1000v dvSwitch. Note down the inside port profile, which needs to be provided while adding the ASA appliance to CloudStack.

For information on configuration, see Configuring a vSphere Cluster with Nexus 1000v Virtual Switch.

Deploy and configure Cisco VNMC.

For more information, see Installing Cisco Virtual Network Management Center and Configuring Cisco Virtual Network Management Center.

Register Cisco Nexus 1000v dvSwitch with Cisco VNMC.

For more information, see Registering a Cisco Nexus 1000V with Cisco VNMC.

Create Inside and Outside port profiles in Cisco Nexus 1000v dvSwitch.

For more information, see Configuring a vSphere Cluster with Nexus 1000v Virtual Switch.

Deploy and configure the Cisco ASA 1000v appliance.

For more information, see Setting Up the ASA 1000V Using VNMC.

Typically, you create a pool of ASA 1000v appliances and register them with CloudStack.

Specify the following while setting up a Cisco ASA 1000v instance:

- VNMC host IP.

- Ensure that you add ASA appliance in VNMC mode.

- Port profiles for the Management and HA network interfaces. These need to be pre-created on the Cisco Nexus 1000v dvSwitch.

- Internal and external port profiles.

- The Management IP for Cisco ASA 1000v appliance. Specify the gateway such that the VNMC IP is reachable.

- Administrator credentials

- VNMC credentials

Register Cisco ASA 1000v with VNMC.

After Cisco ASA 1000v instance is powered on, register VNMC from the ASA console.

Ensure that all the prerequisites are met.

See “Prerequisites”.

Add a VNMC instance.

Add an ASA 1000v instance.

See Adding an ASA 1000v Instance.

Create a Network Offering and use Cisco VNMC as the service provider for desired services.

Create an Isolated Guest Network by using the network offering you just created.

Adding a VNMC Instance¶

Log in to the CloudStack UI as administrator.

In the left navigation bar, click Infrastructure.

In Zones, click View More.

Choose the zone you want to work with.

Click the Physical Network tab.

In the Network Service Providers node of the diagram, click Configure.

You might have to scroll down to see this.

Click Cisco VNMC.

Click View VNMC Devices.

Click Add VNMC Device and provide the following:

- Host: The IP address of the VNMC instance.

- Username: The user name of the account on the VNMC instance that CloudStack should use.

- Password: The password of the account.

Click OK.

Adding an ASA 1000v Instance¶

Log in to the CloudStack UI as administrator.

In the left navigation bar, click Infrastructure.

In Zones, click View More.

Choose the zone you want to work with.

Click the Physical Network tab.

In the Network Service Providers node of the diagram, click Configure.

You might have to scroll down to see this.

Click Cisco VNMC.

Click View ASA 1000v.

Click Add CiscoASA1000v Resource and provide the following:

Host: The management IP address of the ASA 1000v instance. The IP address is used to connect to ASA 1000V.

Inside Port Profile: The Inside Port Profile configured on Cisco Nexus 1000v dvSwitch.

Cluster: The VMware cluster to which you are adding the ASA 1000v instance.

Ensure that the cluster is Cisco Nexus 1000v dvSwitch enabled.

Click OK.

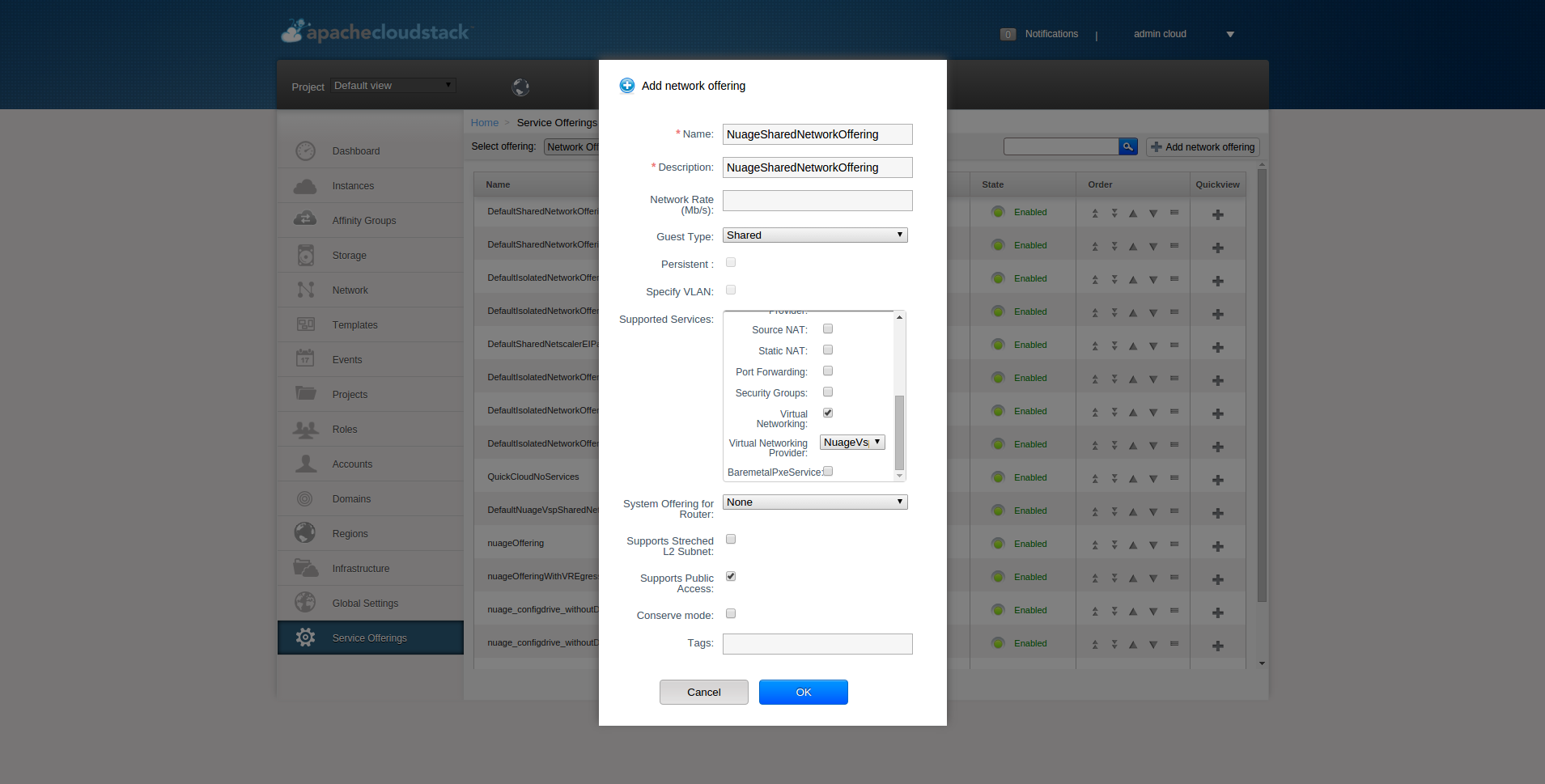

Creating a Network Offering Using Cisco ASA 1000v¶

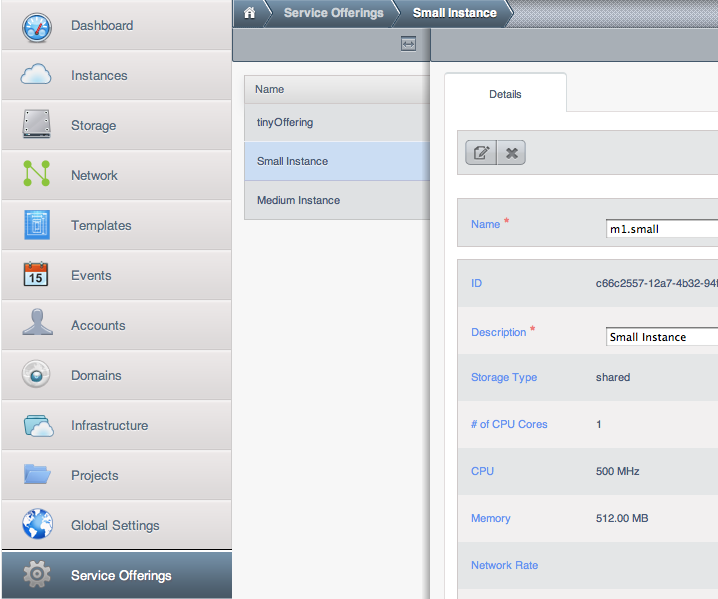

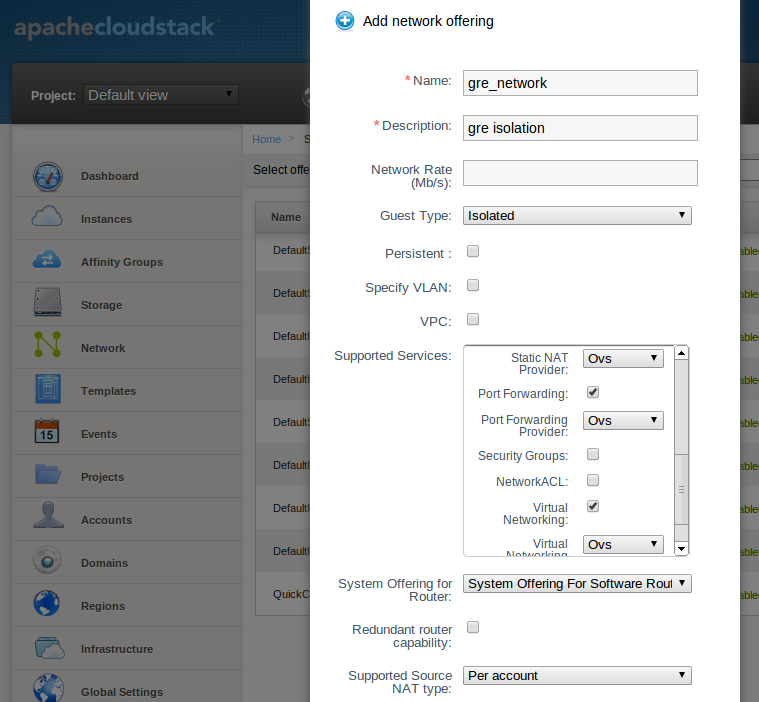

To have Cisco ASA 1000v support for a guest network, create a network offering as follows:

Log in to the CloudStack UI as a user or admin.

From the Select Offering drop-down, choose Network Offering.

Click Add Network Offering.

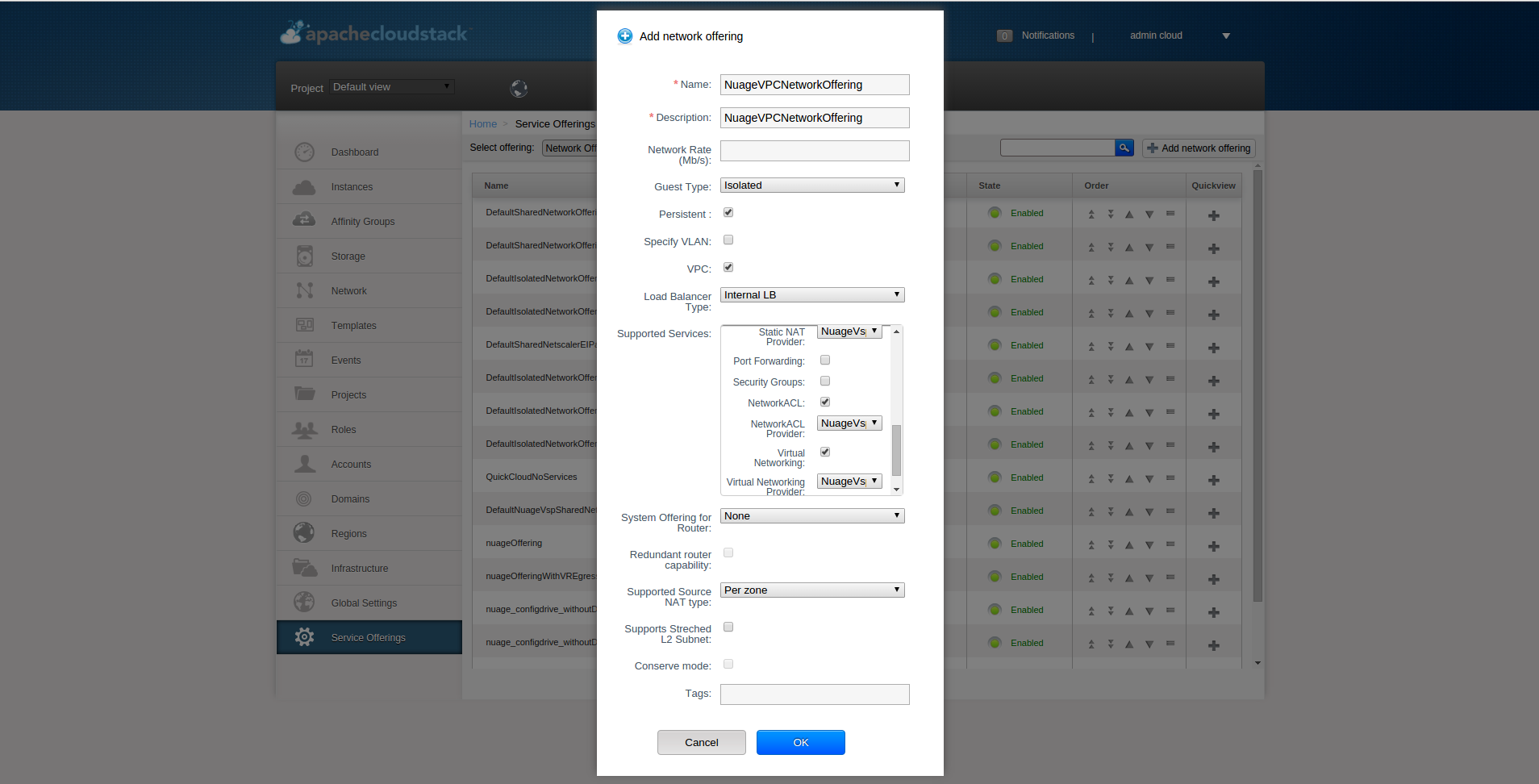

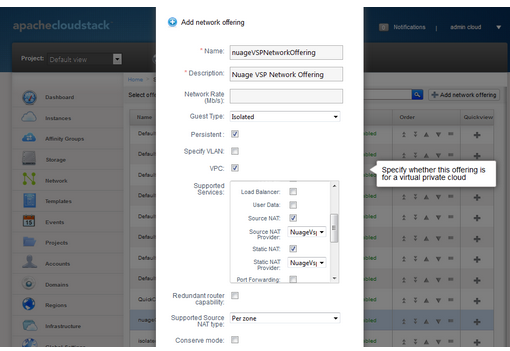

In the dialog, make the following choices:

- Name: Any desired name for the network offering.

- Description: A short description of the offering that can be displayed to users.

- Network Rate: Allowed data transfer rate in megabits per second (Mbps).

- Traffic Type: The type of network traffic that will be carried on the network.

- Guest Type: Choose whether the guest network is isolated or shared.

- Persistent: Indicate whether the guest network is persistent or not. The network that you can provision without having to deploy a VM on it is termed persistent network.

- VPC: This option indicates whether the guest network is Virtual Private Cloud-enabled. A Virtual Private Cloud (VPC) is a private, isolated part of CloudStack. A VPC can have its own virtual network topology that resembles a traditional physical network. For more information on VPCs, see About Virtual Private Clouds.

- Specify VLAN: (Isolated guest networks only) Indicate whether a VLAN should be specified when this offering is used.

- Supported Services: Use Cisco VNMC as the service provider for Firewall, Source NAT, Port Forwarding, and Static NAT to create an Isolated guest network offering.

- System Offering: Choose the system service offering that you want virtual routers to use in this network.

- Conserve mode: Indicate whether to use conserve mode. In this mode, network resources are allocated only when the first virtual machine starts in the network.

Click OK.

The network offering is created.

Reusing ASA 1000v Appliance in new Guest Networks¶

You can reuse an ASA 1000v appliance in a new guest network after the necessary cleanup. Typically, ASA 1000v is cleaned up when the logical edge firewall is cleaned up in VNMC. If this cleanup does not happen, you need to reset the appliance to its factory settings for use in new guest networks. As part of this, enable SSH on the appliance and store the SSH credentials by registering on VNMC.

Open a command line on the ASA appliance:

Run the following:

```
ASA1000V(config)# reload
```

You are prompted with the following message:

```
System config has been modified. Save? [Y]es/[N]o:
```

Enter N.

You will get the following confirmation message:

```
Proceed with reload? [confirm]
```

Restart the appliance.

Register the ASA 1000v appliance with the VNMC:

```
ASA1000V(config)# vnmc policy-agent
ASA1000V(config-vnmc-policy-agent)# registration host vnmc_ip_address
ASA1000V(config-vnmc-policy-agent)# shared-secret key
```

where key is the shared secret for authentication of the ASA 1000V connection to the Cisco VNMC.

External Guest Load Balancer Integration (Optional)¶

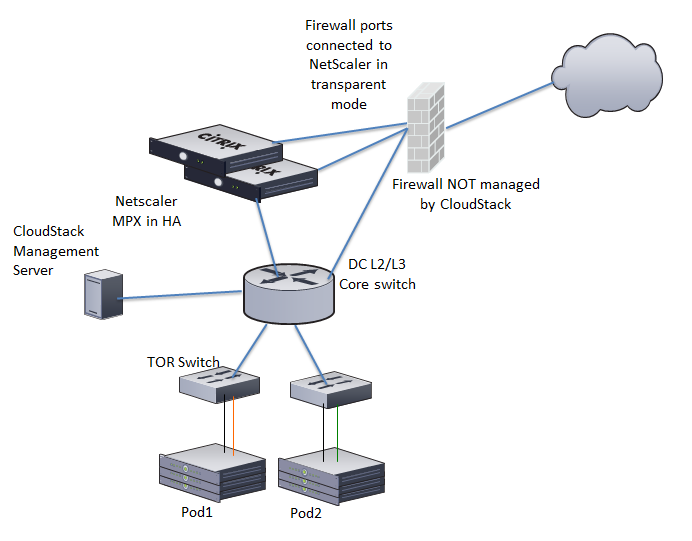

CloudStack can optionally use a Citrix NetScaler or BigIP F5 load balancer to provide load balancing services to guests. If this is not enabled, CloudStack will use the software load balancer in the virtual router.

To install and enable an external load balancer for CloudStack management:

Set up the appliance according to the vendor’s directions.

Connect it to the networks carrying public traffic and management traffic (these could be the same network).

Record the IP address, username, password, public interface name, and private interface name. The interface names will be something like “1.1” or “1.2”.

Make sure that the VLANs are trunked to the management network interface.

After the CloudStack Management Server is installed, log in as administrator to the CloudStack UI.

In the left navigation bar, click Infrastructure.

In Zones, click View More.

Choose the zone you want to work with.

Click the Network tab.

In the Network Service Providers node of the diagram, click Configure. (You might have to scroll down to see this.)

Click NetScaler or F5.

Click the Add button (+) and provide the following:

For NetScaler:

- IP Address: The IP address of the device.

- Username/Password: The authentication credentials CloudStack uses to access the device.

- Type: The type of device that is being added. It could be F5 Big Ip Load Balancer, NetScaler VPX, NetScaler MPX, or NetScaler SDX. For a comparison of the NetScaler types, see the CloudStack Administration Guide.

- Public interface: Interface of device that is configured to be part of the public network.

- Private interface: Interface of device that is configured to be part of the private network.

- Number of retries: Number of times to attempt a command on the device before considering the operation failed. Default is 2.

- Capacity: The number of networks the device can handle.

- Dedicated: When marked as dedicated, this device will be dedicated to a single account. When Dedicated is checked, the value in the Capacity field is ignored and the capacity is implicitly 1.

Click OK.

The installation and provisioning of the external load balancer is finished. You can proceed to add VMs and NAT or load balancing rules.
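To make the interplay between the Dedicated and Capacity fields concrete, here is a minimal Python sketch that models the values entered in the Add dialog. The class name ExternalLbDevice and its attribute names are hypothetical and do not correspond to a CloudStack API; only the device type names, the retry default of 2 and the "dedicated means capacity 1" rule come from the list above.

```python
from dataclasses import dataclass

# Device types as listed in the Add dialog description above.
DEVICE_TYPES = {"F5 Big Ip Load Balancer", "NetScaler VPX", "NetScaler MPX", "NetScaler SDX"}


@dataclass
class ExternalLbDevice:
    """Hypothetical model of the fields entered when adding a NetScaler or F5 device."""
    ip_address: str
    username: str
    password: str
    device_type: str
    public_interface: str   # for example "1.1"
    private_interface: str  # for example "1.2"
    retries: int = 2        # attempts per command before the operation is considered failed
    capacity: int = 0       # number of networks the device can handle
    dedicated: bool = False

    def __post_init__(self) -> None:
        if self.device_type not in DEVICE_TYPES:
            raise ValueError(f"unknown device type: {self.device_type}")

    def effective_capacity(self) -> int:
        # A dedicated device serves a single account, so the Capacity field
        # is ignored and the capacity is treated as 1.
        return 1 if self.dedicated else self.capacity
```

With capacity=50, a shared device reports 50 networks, while the same device marked dedicated reports 1.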

Management Server Load Balancing¶

CloudStack can use a load balancer to provide a virtual IP for multiple Management Servers. The administrator is responsible for creating the load balancer rules for the Management Servers. The application requires persistence or stickiness across multiple sessions. The following chart lists the ports that should be load balanced and whether or not persistence is required.

Even if persistence is not required, enabling it is permitted.

| Source Port | Destination Port | Protocol | Persistence Required? |
|---|---|---|---|
| 80 or 443 | 8080 (or 20400 with AJP) | HTTP (or AJP) | Yes |
| 8250 | 8250 | TCP | Yes |
| 8096 | 8096 | HTTP | No |
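The table above can be turned into a simple check for a proposed load balancer setup. This is a minimal Python sketch; the input format (a dictionary mapping each destination port that has a rule to whether stickiness is enabled) and the names audit_lb_config and LbPortRule are hypothetical, and the sketch assumes HTTP on 8080 rather than AJP on 20400.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LbPortRule:
    source_ports: tuple
    destination_port: int
    protocol: str
    persistence_required: bool


# One entry per row of the table. If AJP is used, the first rule
# targets 20400 instead of 8080.
MANAGEMENT_LB_RULES = (
    LbPortRule((80, 443), 8080, "HTTP", True),
    LbPortRule((8250,), 8250, "TCP", True),
    LbPortRule((8096,), 8096, "HTTP", False),
)


def audit_lb_config(sticky_by_port: dict) -> list:
    """Compare a proposed load balancer setup with the table.

    sticky_by_port maps each destination port that has a rule to whether
    persistence (stickiness) is enabled for it. Enabling persistence where
    it is not required is allowed, so only missing rules and missing
    required persistence are reported.
    """
    problems = []
    for rule in MANAGEMENT_LB_RULES:
        if rule.destination_port not in sticky_by_port:
            problems.append(f"no rule for destination port {rule.destination_port}")
        elif rule.persistence_required and not sticky_by_port[rule.destination_port]:
            problems.append(f"port {rule.destination_port} requires persistence")
    return problems
```

For example, audit_lb_config({8080: True, 8250: False}) reports that port 8250 requires persistence and that there is no rule for port 8096.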

In addition to the above settings, the administrator is responsible for changing the ‘host’ global configuration value from the Management Server IP address to the load balancer virtual IP (VIP) address. If ‘host’ is not set to the VIP for port 8250 and one of your Management Servers crashes, the UI is still available, but the system VMs will not be able to contact the Management Server.
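Besides the Global Settings page in the UI, the ‘host’ value can be changed with the updateConfiguration API command. The following is a minimal Python sketch of building such a request, assuming the usual CloudStack signing scheme: parameters sorted by name with URL-encoded values, an HMAC-SHA1 over the lowercased query using your secret key, then Base64 and URL encoding of the digest. The endpoint, API key, secret key and VIP are placeholders, and the exact escaping of unusual characters (for example * or ~) may differ from what the server expects, so check it against the CloudStack API Developer’s Guide before relying on it.

```python
import base64
import hashlib
import hmac
from urllib.parse import quote


def sign_query(params: dict, secret_key: str) -> str:
    """Return a query string for params with a CloudStack-style signature appended."""
    # Sort parameters by name and URL-encode each value.
    pairs = sorted(params.items(), key=lambda kv: kv[0].lower())
    query = "&".join(f"{name}={quote(str(value), safe='')}" for name, value in pairs)
    # HMAC-SHA1 over the lowercased query, then Base64- and URL-encode the digest.
    digest = hmac.new(secret_key.encode(), query.lower().encode(), hashlib.sha1).digest()
    signature = quote(base64.b64encode(digest).decode(), safe="")
    return f"{query}&signature={signature}"


def set_host_to_vip_url(endpoint: str, vip: str, api_key: str, secret_key: str) -> str:
    """Build a signed updateConfiguration request that sets 'host' to the VIP."""
    params = {
        "command": "updateConfiguration",
        "name": "host",
        "value": vip,
        "apiKey": api_key,
        "response": "json",
    }
    return f"{endpoint}?{sign_query(params, secret_key)}"
```

The resulting URL, for an endpoint such as http://management-server:8080/client/api, can then be fetched with urllib.request.urlopen or any HTTP client.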

Topology Requirements¶

Security Requirements¶

The public Internet must not be able to access port 8096 or port 8250 on the Management Server.

Runtime Internal Communications Requirements¶

- The Management Servers communicate with each other to coordinate tasks. This communication uses TCP on ports 8250 and 9090.

- The console proxy VMs connect to all hosts in the zone over the management traffic network. Therefore the management traffic network of any given pod in the zone must have connectivity to the management traffic network of all other pods in the zone.

- The secondary storage VMs and console proxy VMs connect to the Management Server on port 8250. If you are using multiple Management Servers, the load balanced IP address of the Management Servers on port 8250 must be reachable.
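A quick way to confirm the ports listed above are open is a plain TCP connection test. This minimal Python sketch probes 8250 and 9090 on each peer Management Server and 8250 on the load-balanced address; the peer list and VIP are placeholders you supply. It only shows reachability from the machine running the script, not from the system VMs themselves.

```python
import socket


def port_reachable(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port opens within timeout seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, timed out, unreachable, or name resolution failure
        return False


def check_management_connectivity(peers: list, lb_vip: str) -> dict:
    """Probe 8250 and 9090 on each peer Management Server and 8250 on the
    load-balanced address used by the system VMs."""
    results = {}
    for peer in peers:
        for port in (8250, 9090):
            results[(peer, port)] = port_reachable(peer, port)
    results[(lb_vip, 8250)] = port_reachable(lb_vip, 8250)
    return results
```

Running it on each Management Server with the addresses of the other servers gives a quick pass/fail map keyed by (host, port).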

Storage Network Topology Requirements¶

The secondary storage NFS export is mounted by the secondary storage VM. Secondary storage traffic goes over the management traffic network, even if there is a separate storage network. Primary storage traffic goes over the storage network, if available. If you choose to place secondary storage NFS servers on the storage network, you must make sure there is a route from the management traffic network to the storage network.

External Firewall Topology Requirements¶

When external firewall integration is in place, the public IP VLAN must still be trunked to the Hosts. This is required to support the Secondary Storage VM and Console Proxy VM.

Advanced Zone Topology Requirements¶

With Advanced Networking, separate subnets must be used for private and public networks.
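Whether two subnets are truly separate can be checked with Python’s standard ipaddress module, which reports any address overlap between two networks. This is a minimal sketch; the function name and the example CIDRs are illustrative.

```python
import ipaddress


def subnets_are_separate(private_cidr: str, public_cidr: str) -> bool:
    """Return True if the private and public networks share no addresses."""
    private = ipaddress.ip_network(private_cidr)
    public = ipaddress.ip_network(public_cidr)
    return not private.overlaps(public)
```

A private 192.168.10.0/24 and a public 203.0.113.0/24 pass, while 192.168.10.0/24 and 192.168.10.128/25 do not, because the second lies inside the first.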

XenServer Topology Requirements¶

The Management Servers communicate with XenServer hosts on ports 22 (ssh), 80 (HTTP), and 443 (HTTPS).

VMware Topology Requirements¶

- The Management Server and secondary storage VMs must be able to access vCenter and all ESXi hosts in the zone. To allow the necessary access through the firewall, keep port 443 open.

- The Management Servers communicate with VMware vCenter servers on port 443 (HTTPS).

- The Management Servers communicate with the System VMs on port 3922 (ssh) on the management traffic network.

Hyper-V Topology Requirements¶

CloudStack Management Server communicates with Hyper-V Agent by using HTTPS. For secure communication between the Management Server and the Hyper-V host, open port 8250.

KVM Topology Requirements¶

The Management Servers communicate with KVM hosts on port 22 (ssh).

LXC Topology Requirements¶

The Management Servers communicate with LXC hosts on port 22 (ssh).
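When a cloud mixes hypervisors, the port requirements above can be gathered into one lookup table for writing firewall rules. This minimal Python sketch only restates the ports from the preceding sections; the dictionary layout and the function name ports_to_open are hypothetical. Port 3922 is the SSH port on the System VMs and is listed under VMware because that is where the text mentions it.

```python
# Destinations and ports the Management Server must reach, per hypervisor,
# as stated in the topology requirements above.
MGMT_SERVER_DESTINATIONS = {
    "XenServer": [("XenServer hosts", 22), ("XenServer hosts", 80), ("XenServer hosts", 443)],
    "VMware": [("vCenter", 443), ("ESXi hosts", 443), ("system VMs", 3922)],
    "Hyper-V": [("Hyper-V Agent", 8250)],
    "KVM": [("KVM hosts", 22)],
    "LXC": [("LXC hosts", 22)],
}


def ports_to_open(hypervisors: list) -> list:
    """Return the sorted set of ports needed for the given hypervisor types."""
    ports = set()
    for hv in hypervisors:
        if hv not in MGMT_SERVER_DESTINATIONS:
            raise ValueError(f"unknown hypervisor: {hv}")
        ports.update(port for _, port in MGMT_SERVER_DESTINATIONS[hv])
    return sorted(ports)
```

For a zone with both XenServer and VMware clusters, ports_to_open(["XenServer", "VMware"]) returns [22, 80, 443, 3922].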

Guest Network Usage Integration for Traffic Sentinel¶

To collect usage data for a guest network, CloudStack needs to pull the data from an external network statistics collector installed on the network. Metering statistics for guest networks are available through CloudStack’s integration with inMon Traffic Sentinel.

Traffic Sentinel is a network traffic usage data collection package. CloudStack can feed statistics from Traffic Sentinel into its own usage records, providing a basis for billing users of cloud infrastructure. Traffic Sentinel uses the traffic monitoring protocol sFlow. Routers and switches generate sFlow records and provide them for collection by Traffic Sentinel; CloudStack then queries the Traffic Sentinel database to obtain this information.

To construct the query, CloudStack determines what guest IPs were in use during the current query interval. This includes both newly assigned IPs and IPs that were assigned in a previous time period and continued to be in use. CloudStack queries Traffic Sentinel for network statistics that apply to these IPs during the time period they remained allocated in CloudStack. The returned data is correlated with the customer account that owned each IP and the timestamps when IPs were assigned and released in order to create billable metering records in CloudStack. When the Usage Server runs, it collects this data.
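The query construction described above amounts to clipping each IP allocation to the query interval. The following minimal Python sketch shows that idea; the record types IpAllocation and QueryWindow and the function query_windows are hypothetical and are not CloudStack’s internal classes. Each resulting window pairs an IP and its owning account with the time span to ask Traffic Sentinel about.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime
from typing import Optional


@dataclass(frozen=True)
class IpAllocation:
    """Hypothetical record of one guest IP assignment."""
    ip: str
    account: str
    assigned: datetime
    released: Optional[datetime] = None  # None while the IP is still allocated


@dataclass(frozen=True)
class QueryWindow:
    ip: str
    account: str
    start: datetime
    end: datetime


def query_windows(allocations: list[IpAllocation], interval_start: datetime,
                  interval_end: datetime) -> list[QueryWindow]:
    """Clip each allocation to [interval_start, interval_end) and keep non-empty ones.

    This covers IPs assigned during the interval as well as IPs assigned earlier
    that are still in use, and keeps the owning account for billing.
    """
    windows = []
    for alloc in allocations:
        end_of_use = alloc.released if alloc.released is not None else interval_end
        start = max(alloc.assigned, interval_start)
        end = min(end_of_use, interval_end)
        if start < end:
            windows.append(QueryWindow(alloc.ip, alloc.account, start, end))
    return windows
```

An IP that was reassigned to a different account during the interval simply appears as two allocation records, so its traffic is split between the two accounts by time.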

To set up the integration between CloudStack and Traffic Sentinel:

On your network infrastructure, install Traffic Sentinel and configure it to gather traffic data. For installation and configuration steps, see inMon documentation at Traffic Sentinel Documentation.

In the Traffic Sentinel UI, configure Traffic Sentinel to accept script querying from guest users. CloudStack will be the guest user performing the remote queries to gather network usage for one or more IP addresses.

Click File > Users > Access Control > Reports Query, then select Guest from the drop-down list.

On CloudStack, add the Traffic Sentinel host by calling the CloudStack API command addTrafficMonitor. Pass in the URL of the Traffic Sentinel as protocol + host + port (optional); for example, http://10.147.28.100:8080. For the addTrafficMonitor command syntax, see the API Reference at API Documentation.

For information about how to call the CloudStack API, see the CloudStack API Developer’s Guide.
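As a small illustration of the URL format, this minimal Python sketch assembles the addTrafficMonitor parameters from a protocol, host and optional port. The function name is hypothetical, and restricting the protocol to http or https is an assumption of the sketch. The resulting parameters still have to be signed like any other CloudStack API request before they are sent.

```python
from typing import Optional


def traffic_monitor_params(zone_id: str, protocol: str, host: str,
                           port: Optional[int] = None) -> dict:
    """Build addTrafficMonitor parameters; url is protocol + host + optional port."""
    if protocol not in ("http", "https"):  # assumption: only these two are accepted here
        raise ValueError(f"unsupported protocol: {protocol}")
    if port is not None and not 0 < port < 65536:
        raise ValueError(f"invalid port: {port}")
    url = f"{protocol}://{host}" if port is None else f"{protocol}://{host}:{port}"
    return {"command": "addTrafficMonitor", "zoneid": zone_id, "url": url}
```

With the example host from the step above, traffic_monitor_params(zone_id, "http", "10.147.28.100", 8080) yields the url http://10.147.28.100:8080.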

Log in to the CloudStack UI as administrator.

Select Configuration from the Global Settings page, and set the following:

direct.network.stats.interval: How often you want CloudStack to query Traffic Sentinel.

Setting Zone VLAN and Running VM Maximums¶

In the external networking case, every VM in a zone must have a unique guest IP address. Two variables determine how to configure CloudStack to support this: how many zone VLANs you expect to have, and how many VMs you expect to have running in the zone at any one time.

Use the following table to determine how to configure CloudStack for your deployment.

| guest.vlan.bits | Maximum Running VMs per Zone | Maximum Zone VLANs |
|---|---|---|
| 12 | 4096 | 4094 |
| 11 | 8192 | 2048 |
| 10 | 16384 | 1024 |
| 9 | 32768 | 512 |

Based on your deployment’s needs, choose the appropriate value of guest.vlan.bits. Set it as described in Edit the Global Configuration Settings (Optional) section and restart the Management Server.
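The values in the table follow a simple pattern: the VLAN limit is 2 to the power of guest.vlan.bits (4094 rather than 4096 at 12 bits, consistent with 802.1Q reserving VLAN IDs 0 and 4095), and the running-VM limit is 2 to the power of (24 minus guest.vlan.bits). That formula is inferred from the table, not documented, so this minimal Python sketch only covers the table’s rows (9 to 12). The helper choose_vlan_bits is hypothetical; it picks the largest value that meets both needs, which favours VLAN headroom.

```python
TABLE_BITS = range(9, 13)  # guest.vlan.bits values covered by the table


def zone_limits(guest_vlan_bits: int) -> tuple:
    """Return (max running VMs per zone, max zone VLANs) for a table row."""
    if guest_vlan_bits not in TABLE_BITS:
        raise ValueError("this sketch only covers guest.vlan.bits 9 to 12")
    max_vlans = 2 ** guest_vlan_bits
    if guest_vlan_bits == 12:
        max_vlans -= 2  # VLAN IDs 0 and 4095 are reserved by 802.1Q
    max_vms = 2 ** (24 - guest_vlan_bits)  # pattern inferred from the table
    return max_vms, max_vlans


def choose_vlan_bits(expected_vlans: int, expected_running_vms: int):
    """Return the largest table value satisfying both needs, or None if none does."""
    for bits in sorted(TABLE_BITS, reverse=True):
        max_vms, max_vlans = zone_limits(bits)
        if max_vms >= expected_running_vms and max_vlans >= expected_vlans:
            return bits
    return None
```

For example, a zone expecting 2000 VLANs and 5000 running VMs needs guest.vlan.bits = 11, while 1000 VLANs with 20000 running VMs cannot be satisfied by any row.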

Storage Setup¶

Introduction¶

Primary Storage¶

CloudStack is designed to work with a wide variety of commodity and enterprise-rated storage systems. CloudStack can also leverage the local disks within the hypervisor hosts if supported by the selected hypervisor. Storage type support for guest virtual disks differs based on hypervisor selection.

| Storage Type | XenServer | vSphere | KVM |
|---|---|---|---|
| NFS | Supported | Supported | Supported |
| iSCSI | Supported | Supported via VMFS | Supported via Clustered Filesystems |
| Fibre Channel | Supported via Pre-existing SR | Supported | Supported via Clustered Filesystems |
| Local Disk | Supported | Supported | Supported |

The use of the Cluster Logical Volume Manager (CLVM) for KVM is not officially supported with CloudStack.

Secondary Storage¶

CloudStack is designed to work with any scalable secondary storage system. The only requirement is that the secondary storage system supports the NFS protocol. For large, multi-zone deployments, S3-compatible storage is also supported for secondary storage. This allows for secondary storage that can span an entire region. However, an NFS staging area must be maintained in each zone, as most hypervisors are not capable of directly mounting S3-type storage.

Configurations¶

Small-Scale Setup¶

In a small-scale setup, a single NFS server can function as both primary and secondary storage. The NFS server must export two separate shares, one for primary storage and the other for secondary storage. This could be a VM or physical host running an NFS service on a Linux OS or a virtual software appliance. Disk and network performance are still important in a small-scale setup to get a good experience when deploying, running or snapshotting VMs.

Large-Scale Setup¶

In large-scale environments primary and secondary storage typically consist of independent physical storage arrays.

Primary storage is likely to have to support mostly random read/write I/O once a template has been deployed. Secondary storage is only going to experience sustained sequential reads or writes.

In clouds which will experience a large number of users taking snapshots or deploying VMs at the same time, secondary storage performance will be important to maintain a good user experience.

It is important to start the design of your storage with a rough profile of the workloads it will be required to support. Consider the IOPS demands of your guest VMs as carefully as the volume of data to be stored and the bandwidth (MB/s) available at the storage interfaces.
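As a worked example of such a profile, this minimal Python sketch turns per-VM averages into aggregate IOPS, bandwidth and capacity figures, estimating bandwidth as IOPS multiplied by the average I/O size. The WorkloadProfile and storage_requirements names and all input numbers are hypothetical; averages hide peaks, so treat the result as a floor rather than a target.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class WorkloadProfile:
    vm_count: int
    iops_per_vm: float       # average I/O operations per second per VM
    io_size_kib: float       # average I/O size in KiB
    disk_gib_per_vm: float   # provisioned disk per VM in GiB


def storage_requirements(p: WorkloadProfile) -> dict:
    """Aggregate a per-VM profile into cloud-wide storage figures."""
    total_iops = p.vm_count * p.iops_per_vm
    bandwidth_mib_s = total_iops * p.io_size_kib / 1024  # KiB/s converted to MiB/s
    capacity_gib = p.vm_count * p.disk_gib_per_vm
    return {"iops": total_iops, "bandwidth_mib_s": bandwidth_mib_s, "capacity_gib": capacity_gib}
```

For instance, 200 VMs averaging 50 IOPS of 16 KiB I/O with 40 GiB disks need about 10,000 IOPS, 156.25 MiB/s and 8,000 GiB, which shows how a modest per-VM load adds up at the array interfaces.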

Storage Architecture¶

There are many different storage types available which are generally suitable for CloudStack environments. Specific use cases should be considered when deciding the best one for your environment and financial constraints often make the ‘perfect’ storage architecture economically unrealistic.

Broadly, the architectures of the available primary storage types can be split into 3 types:

Local Storage¶

Local storage works best for pure ‘cloud-era’ workloads which rarely need to be migrated between storage pools and where HA of individual VMs is not required. As SSDs become more mainstream and affordable, VMs on local storage can now be served with levels of IOPS that previously could only be generated by large arrays with tens of spindles. Local storage is highly scalable because as you add hosts you add storage in the same proportion. Local storage is relatively inefficient, as it cannot take advantage of linked clones or any deduplication.

Network Configuration For Storage¶

Care should be taken when designing your cloud to take into consideration not only the performance of your disk arrays but also the bandwidth available to move that traffic between the switch fabric and the array interfaces.

CloudStack Networking For Storage¶

The first thing to understand is the process of provisioning primary storage. When you create a primary storage pool for any given cluster, the CloudStack Management Server tells each host’s hypervisor to mount the NFS share (or iSCSI LUN). The storage pool is presented within the hypervisor as a datastore (VMware), a storage repository (XenServer/XCP) or a mount point (KVM). The important point is that the hypervisor itself communicates with the primary storage; the CloudStack Management Server communicates only with the host hypervisor. All hypervisors communicate with the outside world through some kind of management interface, such as the VMkernel port on ESXi or the ‘Management Interface’ on XenServer. Because the CloudStack Management Server needs to communicate with the hypervisor in the host, this management interface must be on the CloudStack ‘management’ or ‘private’ network. Your hosts may have other interfaces carrying guest and public traffic to and from VMs, but the hypervisor itself does not and cannot communicate over these interfaces.

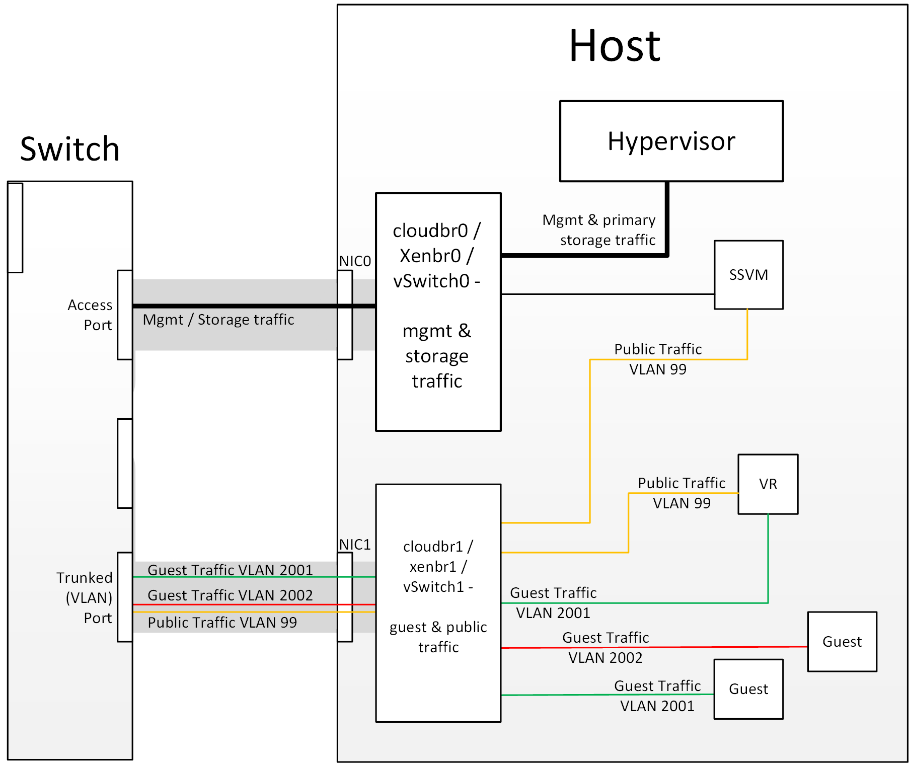

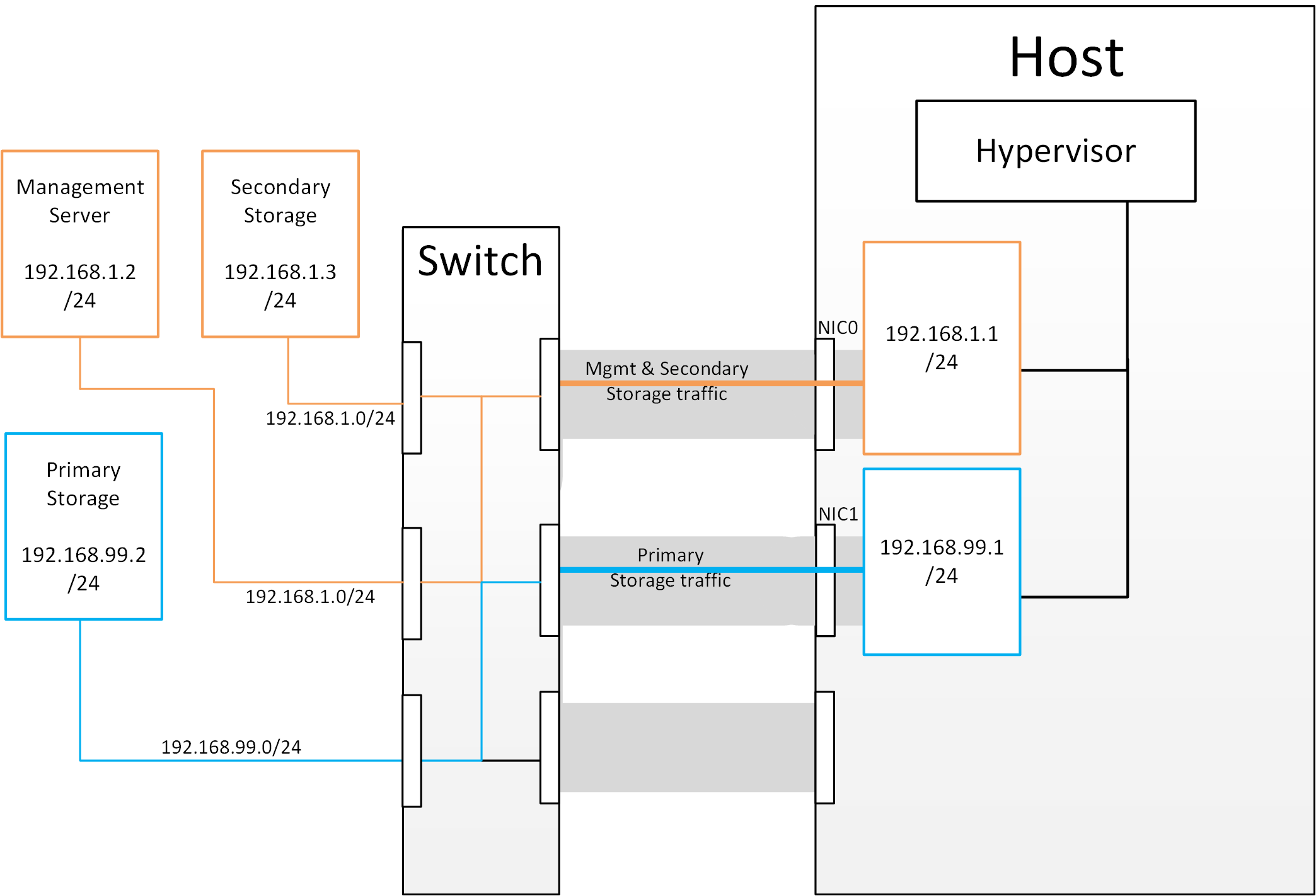

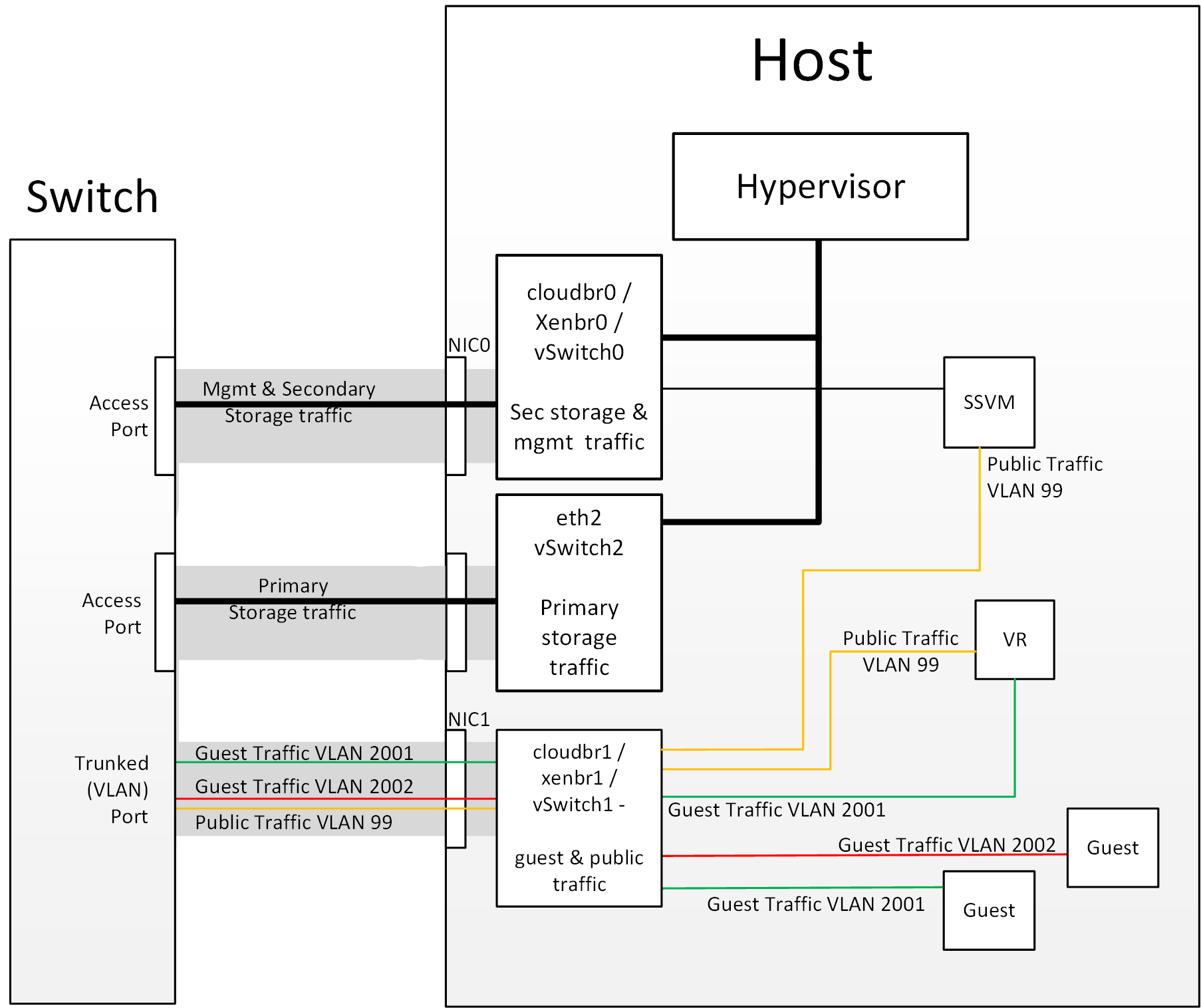

Figure 1: Hypervisor communications


Separating Primary Storage Traffic

For those from a pure virtualisation background, the concept of creating a specific interface for storage traffic will not be new; it has long been best practice for iSCSI traffic to have a dedicated switch fabric to avoid any latency or contention issues. Sometimes in the cloud(Stack) world we forget that we are simply orchestrating processes that the hypervisors already carry out and that many ‘normal’ hypervisor configurations still apply. The logical reasoning which explains how this splitting of traffic works is as follows:

- If you want an additional interface over which the hypervisor can communicate (excluding teamed or bonded interfaces) you need to give it an IP address.

- The mechanism to create an additional interface that the hypervisor can use is to create an additional management interface.

- So that the hypervisor can differentiate between the management interfaces, they have to be in different (non-overlapping) subnets.